Abstract

In this chapter, several technological, or more precisely robotic, solutions from different companies and developers are presented. Together they give an overview of the state of Ambient/Active Assisted Living (AAL), which can be categorized into home care (for independent living in old age), social interaction, health and wellness, interaction and learning, working, and mobility.


4.1 Home Care (Independent Living)

This section gives an overview of robots used in home care. Table 4.1 briefly summarizes the robots introduced in this section.

System Name: PaPeRo

Developer: NEC Corporation

PaPeRo is a “Personal Robot” best known for its recognizable appearance and facial recognition abilities (see Figure 4.1). PaPeRo, which stands for “Partner-type-Personal-Robot,” was developed as a personal assistant that could interact with people in everyday situations. It has a variety of interaction abilities. For example, if asked, “Is today a good day for a drive?,” PaPeRo will autonomously connect to the internet, check the day’s weather forecast, and provide a recommendation. It can also play games with people, provide music for a party, and imitate people in both voice and movement. PaPeRo adapts its personality to the kind of interaction it receives (tone of voice, frequency of interaction, type of question asked, etc.) and develops a simulated mood based on the moods of the people it interacts with. When it has no immediate task, PaPeRo roams around searching for faces and, once it finds one, begins a conversation with that person.

Technology: PaPeRo’s distinctive “eyes” are in fact two cameras that provide its visual awareness and facial recognition. A pair of sensitive microphones lets its speech recognition system determine where, and from whom, speech is coming, so that PaPeRo can react accordingly. It is also equipped with an ultrasound system to detect obstacles. If an object is in its path, the robot determines the object’s exact location and then plans and follows a route around it.
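The source does not say how PaPeRo turns its two microphone signals into a direction. A common approach for a two-microphone array is time difference of arrival (TDOA): find the delay between the two channels, then convert it to an angle with θ = arcsin(c·Δt / d), where c is the speed of sound and d the microphone spacing. The sketch below illustrates that generic technique under stated assumptions; the function names, the 343 m/s speed of sound, and the brute-force cross-correlation are illustrative choices, not NEC’s implementation.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed)


def estimate_lag(left, right, max_lag):
    """Lag (in samples) by which `right` trails `left`, via brute-force cross-correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, x in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                score += x * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def direction_deg(lag_samples, sample_rate, mic_spacing):
    """Source angle from the array's broadside; positive means closer to the left microphone."""
    delay = lag_samples / sample_rate
    ratio = SPEED_OF_SOUND * delay / mic_spacing
    ratio = max(-1.0, min(1.0, ratio))  # guard against noise pushing |ratio| above 1
    return math.degrees(math.asin(ratio))


# Usage: a click reaches the right microphone 2 samples after the left one.
left = [0, 0, 1, 0, 0, 0, 0]
right = [0, 0, 0, 0, 1, 0, 0]
lag = estimate_lag(left, right, max_lag=3)
print(lag, round(direction_deg(lag, sample_rate=16_000, mic_spacing=0.1), 1))
```

Real speech would need windowing and a faster correlation (e.g., via FFT), but the geometry stays the same.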

System Name: Mamoru-Kun

Developer: Center of IRT (CIRT), Tokyo University

Mamoru-Kun is an assistive robot for seniors (see Figure 4.1). Its purpose is to remind users where they may have left often-misplaced items such as keys, glasses, or slippers. The robot can either tell the user verbally where an item is or physically point to its location. Alternatively, it can communicate with its older brother, the “Home Assistance Robot,” and have it retrieve the desired item. Mamoru can also be programmed to give everyday reminders, such as when to take medication.

Technology: Mamoru is primarily an “Object-Recognition-Robot,” equipped with a wide-angle lens. It stands 40 cm tall and weighs 3.8 kg. It has four joints (two in the neck and one in each arm), a microphone, and speakers in order to communicate the location of lost items. In order for personal items to be identified and located, users must register their often-misplaced items in advance to create a sort of inventory for the robot. If the item is within the system’s field of view, object recognition software is able to identify it and inform the user of its location.
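The register-then-recognize workflow described above can be sketched as a small inventory. This is a hypothetical illustration: the `Detection` type, the `ItemFinder` class, and the idea of remembering where an item was last seen are assumptions made for the example, and the object recognizer itself is taken as given rather than implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One result from a (hypothetical) object recognizer."""
    label: str  # e.g. "keys"
    place: str  # named spot, e.g. "kitchen table" (assumed already resolved from the image)


class ItemFinder:
    def __init__(self):
        self.registered = set()  # items the user has registered in advance
        self.last_seen = {}      # label -> place where it was last recognized

    def register(self, label):
        self.registered.add(label)

    def observe(self, detections):
        # Only registered items are tracked; everything else in view is ignored.
        for d in detections:
            if d.label in self.registered:
                self.last_seen[d.label] = d.place

    def where_is(self, label):
        if label not in self.registered:
            return f"{label}: not registered"
        place = self.last_seen.get(label)
        if place is None:
            return f"{label}: not seen yet"
        return f"{label}: last seen at the {place}"


finder = ItemFinder()
finder.register("keys")
finder.observe([Detection("keys", "kitchen table"), Detection("cup", "sink")])
print(finder.where_is("keys"))
```

Keeping the inventory explicit mirrors the requirement that users register items in advance: unregistered objects in the camera view are simply not tracked.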

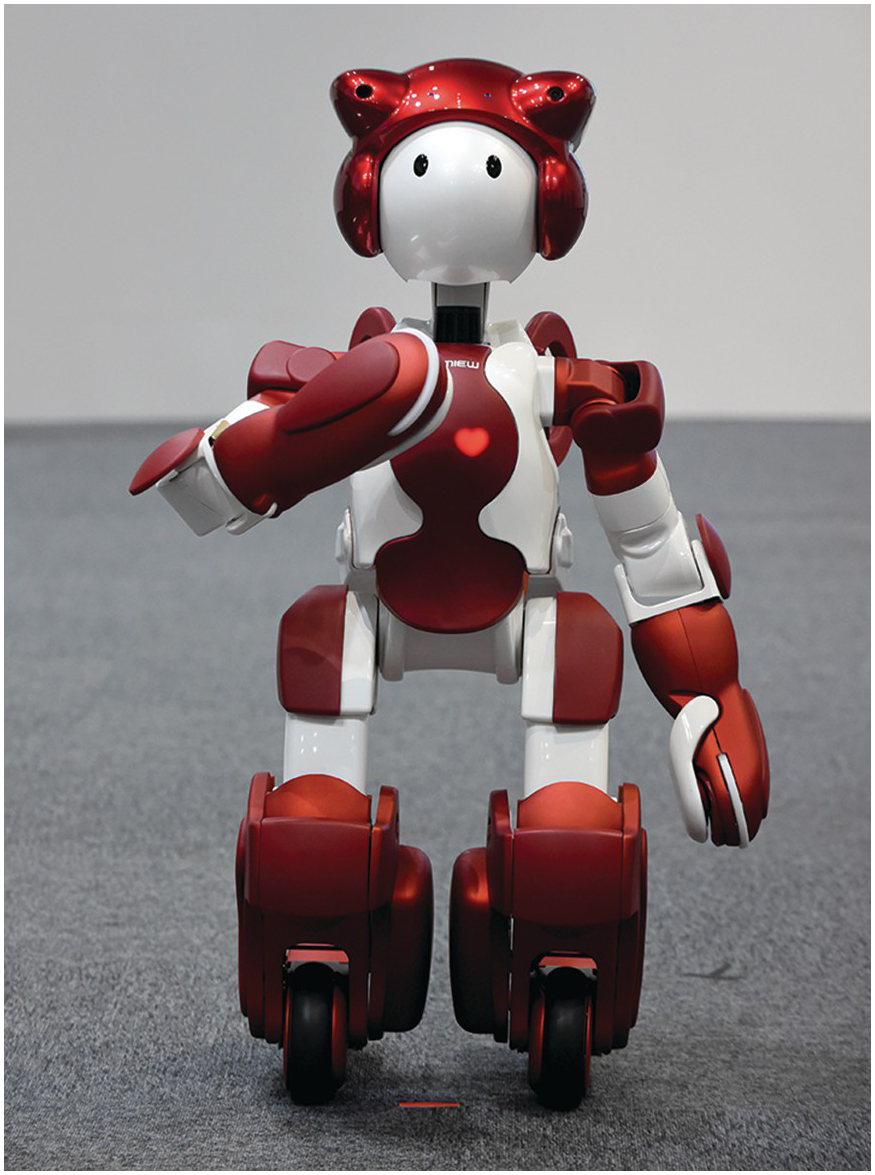

The second iteration of Emiew (Excellent Mobility and Interactive Existence as Workmate, see Figure 4.2) was developed by Hitachi. Emiew is a service robot with diverse communication abilities, designed to coexist safely and comfortably with humans while carrying out services. Its communication is supported by a vocabulary of about 100 words. To achieve higher speeds, Emiew was designed with two wheels as “feet” and can travel at up to 6 km/h. At the time of its development, the previous version of Emiew was the fastest service robot yet built. With sensors on its head, its waist, and near its base, Emiew can interact with users and follow various commands. Its speed and abilities make it particularly useful in offices, where it can help run errands.

Technology: Emiew stands 80 cm tall, weighs just 13 kg, and has a maximum speed of 6 km/h and an acceleration of 4 m/s². A specially developed two-wheel mechanism lets Emiew travel at a pace comparable to human walking. An “active suspension” system consisting of a spring and an actuator gives Emiew agility and lets it roll over small differences in floor level and office obstacles such as cables. Emiew is equipped with no fewer than 14 microphones for accurate voice detection, including the direction of the voice. Noise-cancellation technology filters out the noise produced by the robot itself so that it can focus on the users’ voices. A series of sensors lets the robot detect obstacles, whether stationary or moving (e.g., people), and navigate efficiently around them to reach its destination quickly.
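The quoted speed and acceleration can be checked against each other. Assuming constant acceleration (a simplification; the source gives only the peak values), converting the top speed to SI units gives the time and distance Emiew needs to reach it:

```latex
\begin{aligned}
v_{\max} &= 6\ \text{km/h} = \frac{6000\ \text{m}}{3600\ \text{s}} \approx 1.67\ \text{m/s},\\
t &= \frac{v_{\max}}{a} = \frac{1.67\ \text{m/s}}{4\ \text{m/s}^2} \approx 0.42\ \text{s},\\
s &= \frac{v_{\max}^2}{2a} = \frac{(1.67\ \text{m/s})^2}{2 \cdot 4\ \text{m/s}^2} \approx 0.35\ \text{m}.
\end{aligned}
```

Under this assumption, the robot reaches brisk walking pace within roughly half a second and about a third of a metre.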

System Name: Home Assistant AR

Developer: Center of IRT (CIRT), Tokyo University

AR (shown in Figure 4.2) is a humanoid robot that helps with daily household chores. Its development focused on enabling it to use tools, equipment, and appliances designed for human use. The goal of AR was to clean the home quietly and autonomously. AR can tidy a storage room, sweep and mop floors, clear dishes from the kitchen table and load them into the dishwasher, open and close doors, and even do the laundry. The robot can also move furniture to clean underneath it and, when finished, put the furniture back in its original location. Its mobility is provided by a two-wheel base, a simpler mechanism than more intricate “leg-based” systems. The robot can also perform multiple consecutive tasks, rather than handling each one separately and requiring new instructions after each completed task.

Technology: The Home Assistant AR stands 160 cm tall and weighs approximately 130 kg. It uses five cameras and six lasers to map and efficiently navigate the home. A range finder also lets AR judge distances to obstacles. Based on the 3D geometry of the home that it builds itself, it can execute various complex movements. The robot has a total of 32 joints (three in the neck and head, seven in each arm, six in each hand, one in the hips, and two in the wheel base), which give it the flexibility to complete tasks originally designed for humans. For instance, the neck alone can move in three directions and each arm in seven. It can also assess whether a job it has completed was successful and, if not, repeat the job. The robot can operate for about 30–60 minutes per charge.

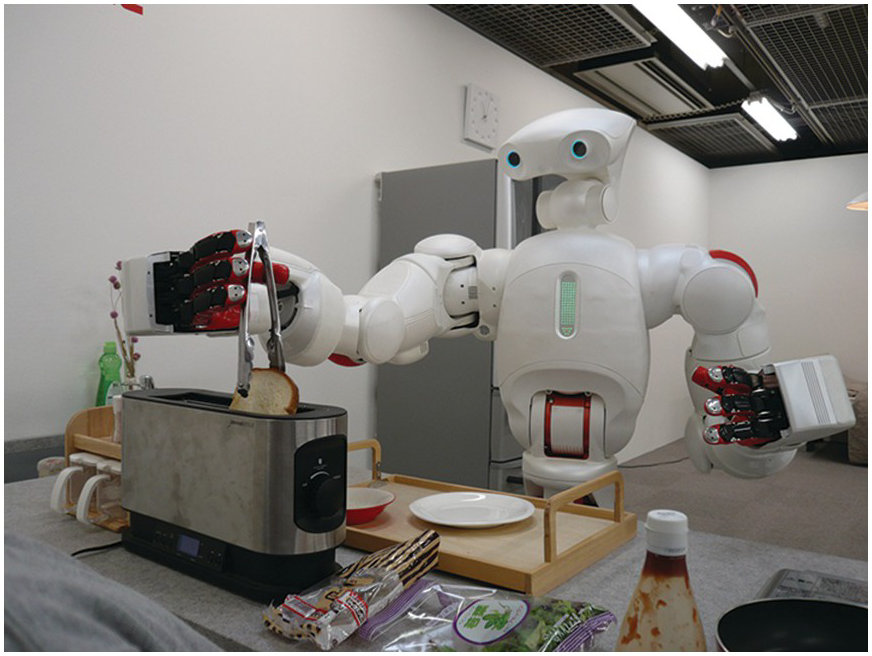

Twendy-One (see Figure 4.3) is a “human symbiotic” robot developed to address the impending labor shortage in caregiving for aging societies. As such, it must carry out the typical functions of human caregivers, including friendly communication, safe physical assistance, and dexterous manipulation. The robot was developed around three core principles: Safety, Dependability, and Dexterity. It combines dexterity with passivity and high power output. This allows it to handle objects of various shapes delicately, passively absorbing the external forces generated by their motion. For example, the robot is gentle yet strong enough to help a person get out of bed, but also dexterous enough to take a piece of toast out of the toaster. Other typical tasks include fetching things from the fridge, picking things up from the floor, and various cleaning tasks.

Technology: Twendy-One stands 1.46 m tall and weighs approximately 111 kg. It has a total of 47 degrees of freedom, including rotational and directional movements. Its shell is covered in a soft silicone skin fitted with 241 force sensors that detect contact (accidental or intentional) with a human user. This allows the robot to act with care when working with people and to react instantly to unforeseen collisions. Its omnidirectional wheelbase lets it move more quickly and efficiently than a traditional bipedal design would. The robot is also equipped with twelve ultrasonic sensors and a six-axis force sensor to actively detect objects and users and avoid collisions. It can operate for approximately 15 minutes on a full charge.

System Name: My Spoon

Developer: Secom

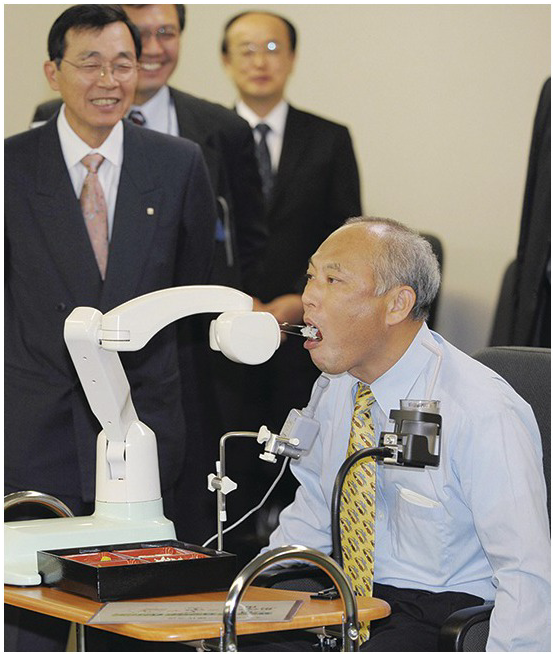

My Spoon (see Figure 4.3) is an assistive eating robot. It provides physical assistance to people who would otherwise need human help when eating because of a physical disability. It does, however, require that users be able to move their head, take the food off the spoon, chew, and swallow while sitting upright. My Spoon can be used with nearly all everyday foods and does not require special food packets. The food should, however, be cut into bite-size pieces, as the robot has no cutting mechanism. My Spoon can also be used for liquids such as soft drinks, coffee, tea, or soup. Unlike most of the robots presented here, My Spoon is available not only in Japan but also across Europe.

Technology: My Spoon measures 28 cm (W) × 37 cm (D) × 25 cm (H) and weighs about 6 kg. It is designed to sit on the table in front of the user. The robot can be operated in one of three modes: manual, semi-automatic, or fully automatic. In manual mode, the user has maximum flexibility and control via a joystick: the user selects one of four food compartments, fine-tunes the position of the spoon, instructs the spoon to pick up a bite of food, and directs it toward their mouth. In semi-automatic mode, the user simply selects the compartment, and My Spoon automatically picks up a bite from it and brings it to the user’s mouth. In fully automatic mode, a single press of a button is enough: My Spoon selects the compartment itself and brings a bite of food up to the user’s mouth.
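The division of labor between user and robot in the three modes can be summarized as a small dispatch. This is a hypothetical sketch: the action strings, the `plan_bite` function, and the round-robin rule for how the fully automatic mode picks a compartment are assumptions, since the source does not describe how My Spoon makes that choice.

```python
from enum import Enum
from typing import List, Optional

COMPARTMENTS = (1, 2, 3, 4)  # the four food compartments


class Mode(Enum):
    MANUAL = "manual"
    SEMI_AUTOMATIC = "semi-automatic"
    AUTOMATIC = "automatic"


def plan_bite(mode: Mode, chosen: Optional[int] = None, last: int = 0) -> List[str]:
    """Return the (hypothetical) action sequence for one bite in the given mode."""
    if mode is Mode.AUTOMATIC:
        # The robot chooses; cycling through the compartments is an assumption.
        compartment = last % len(COMPARTMENTS) + 1
        return [
            "user: press button",
            f"robot: select compartment {compartment}",
            "robot: pick up bite",
            "robot: bring spoon to mouth",
        ]
    if chosen not in COMPARTMENTS:
        raise ValueError("manual and semi-automatic modes need a compartment 1-4")
    if mode is Mode.SEMI_AUTOMATIC:
        return [
            f"user: select compartment {chosen}",
            "robot: pick up bite",
            "robot: bring spoon to mouth",
        ]
    # Manual mode: every step is driven from the joystick.
    return [
        f"joystick: select compartment {chosen}",
        "joystick: fine-tune spoon position",
        "joystick: pick up bite",
        "joystick: bring spoon to mouth",
    ]


print(plan_bite(Mode.SEMI_AUTOMATIC, chosen=2))
```

Reading the three lists side by side shows the trade-off described above: each step up in automation removes user decisions from the sequence.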

The Maron-1 robot (see Figure 4.4) is a cell-phone-operated system for special patient care and for home and office security. The robot can monitor its surroundings, take photos, and send them to the user’s cell phone. It can store the layout of the house or office and, when directed via cell phone, navigate to a specified location while avoiding obstacles and handling slight changes in floor height. It can also follow and actively monitor a specified “patrol route,” detecting any moving object that enters its field of view (e.g., an intruder). Maron-1 also has an infrared remote-control capability that lets it operate appliances such as air conditioners and televisions.

Technology: Maron-1 measures 32 × 36 × 32 cm and weighs about 5 kg. Two powered wheels provide its mobility. Its head carries two cameras that can pan and tilt to capture as much of the surrounding area as possible. It is also equipped with an infrared sensor/emitter for operating appliances and a proximity sensor for detecting obstacles. It runs Microsoft Windows CE 3.0, which enables its communication with mobile phones. The user interface consists of a touchpad, five menu keys, two function keys, a 10 cm LCD monitor, a speaker, and a microphone. The robot can operate for about 12 hours on a single charge.
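How Maron-1 detects moving objects is not specified. A classic technique for a camera that is momentarily stationary on its patrol route is frame differencing: compare consecutive frames and report motion when enough pixels change. The sketch below assumes grayscale frames stored as lists of pixel rows; the thresholds and the `motion_detected` function are illustrative, not Maron-1’s software.

```python
from typing import List

Frame = List[List[int]]  # grayscale pixel rows, values 0-255 (assumed format)


def motion_detected(prev: Frame, curr: Frame,
                    pixel_threshold: int = 30, min_changed_fraction: float = 0.01) -> bool:
    """True if enough pixels changed noticeably between two equally sized frames."""
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have the same shape")
    total = changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += 1
            if abs(p - c) > pixel_threshold:  # ignore small changes such as sensor noise
                changed += 1
    return total > 0 and changed / total >= min_changed_fraction


# Usage: a bright 3x3 object appears in an otherwise unchanged 10x10 scene.
empty_room = [[20] * 10 for _ in range(10)]
with_intruder = [row[:] for row in empty_room]
for y in range(3, 6):
    for x in range(4, 7):
        with_intruder[y][x] = 200
print(motion_detected(empty_room, empty_room), motion_detected(empty_room, with_intruder))
```

The two thresholds trade sensitivity against false alarms: the per-pixel threshold absorbs noise, and the changed-fraction threshold ignores tiny flickers.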

System Name: NetTransorWeb

Developer: Bandai and Evolution Robotics

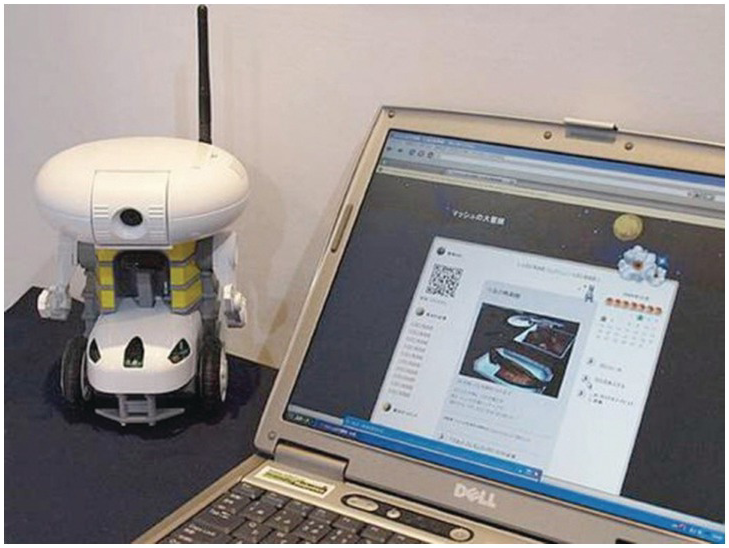

The NetTransorWeb robot (Figure 4.4) from Bandai and Evolution Robotics was designed as a house robot for families and hobbyists alike. Unusually, it was also given the ability to blog, and it can even respond to comments left on its blog, for example by writing context-related replies or by taking another picture of something in the house, perhaps from a different angle. Aside from this feature, the robot’s primary purpose is surveillance. It monitors the home, can take pictures of anything that moves (e.g., an intruder), and immediately alerts the owner. It can receive instructions over the internet or navigate its way around the home autonomously.

Technology: The NetTransorWeb measures about 190 × 160 × 160 mm and weighs just 980 grams. Its battery provides roughly 2.5 hours of operation between charges. It is equipped with cameras, microphones, speakers, and motion sensors, and it connects easily to the internet or a home network over Wi-Fi. The ViPR Vision System from Evolution Robotics gives the robot enough intelligence to learn its environment, detect and navigate around obstacles, and perform various tasks, including monitoring and reporting any unexpected changes in its environment. NetTransorWeb can also collect news from the internet (via RSS) and use it to contribute to the blog. Its blogging abilities include uploading, commenting, and answering, often with witty retorts. Unfortunately, the system is no longer on the market.
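The source says only that the robot collects news via RSS for its blog. A minimal sketch of that step, assuming a standard RSS 2.0 feed and Python’s built-in XML parser, extracts the item titles and turns them into a short post; the post format and the `headlines`/`draft_post` functions are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List


def headlines(rss_xml: str, limit: int = 3) -> List[str]:
    """Titles of the first `limit` <item> elements in an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    titles = []
    for item in root.iter("item"):  # the channel's own <title> is not inside an <item>
        title = (item.findtext("title") or "").strip()
        if title:
            titles.append(title)
        if len(titles) >= limit:
            break
    return titles


def draft_post(rss_xml: str) -> str:
    """Turn the headlines into a one-line blog entry (hypothetical format)."""
    items = headlines(rss_xml)
    if not items:
        return "Nothing new in the news today."
    return "Today's news: " + "; ".join(items) + "."


feed = """<rss version="2.0"><channel><title>Example</title>
<item><title>Rain expected this afternoon</title></item>
<item><title>Trains running on schedule</title></item>
</channel></rss>"""
print(draft_post(feed))
```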

Table 4.1 Overview of the Robots Introduced in this Section

Figure 4.2 (a) Left: EMIEW3.

4.2 Social Interaction

The technologies introduced in this section focus more on robotics and techniques that support care staff in their work. By relieving staff of physical work, they give them more time for social interaction with the elderly. Table 4.2 briefly summarizes the technologies introduced in this section.

System Name: Panasonic Life Wall

Developer: Panasonic

The “Life Wall TV” from Panasonic transforms an entire wall into an interactive touch-screen television that provides large amounts of information and ubiquitous communication. The result is a wall with an embedded digital interface through which any member of the family can independently access entertainment, productivity, or communication features. Facial and voice recognition software identifies which family member is present, and the wall displays the graphical user interface (GUI) specific to that person. The display can be made as small or as large as the user likes (within the dimensions of the actual screen). The wall can also track your movement through the room and, for example, pause your movie while you get up to answer the phone, or have a smaller display follow you around the room. The touch screen allows for engaging interaction, whether for games, system navigation, or any other task. When not in use, the system can show a scenic background of the user’s choice or even disguise itself as an ordinary wall by displaying, for example, bookshelves.

Technology: Panasonic’s Life Wall uses a large LCD display. Facial and voice recognition software allows the system to determine which family member is using it and adjust accordingly, showing that person’s background, pictures, programs, icon and tool layout, etc. The size, clarity, and interactivity of the system take photo viewing, videoconferencing, and computer games into another dimension. The Life Wall is also equipped with “Wireless HD” capabilities for incorporating the internet into any of these features.
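Having a smaller display follow the user while staying within the physical screen reduces to a clamping calculation. The sketch assumes the tracking system already reports the user’s horizontal position in screen pixels; the `follow_window_x` function and the pixel units are hypothetical, not Panasonic’s interface.

```python
def follow_window_x(user_x: float, window_width: float, screen_width: float) -> float:
    """Left edge of a window centred on the user, clamped so it stays fully on screen."""
    if window_width > screen_width:
        raise ValueError("window is wider than the screen")
    left = user_x - window_width / 2
    return float(max(0.0, min(left, screen_width - window_width)))


# Usage: a 400 px window on a 4000 px wall as the user walks from one end to the other.
for x in (50, 1000, 3950):
    print(x, follow_window_x(x, 400, 4000))
```

Centring on the user keeps the content in front of them, and the clamp keeps the window from sliding off either edge of the wall.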

System Name: Robot Town & Robot Care

Developer: Professor Hasegawa, Kyushu University

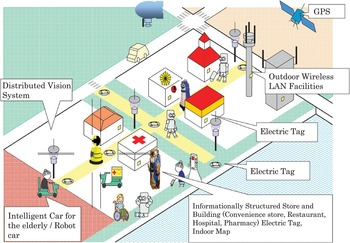

Robot Town (see Figure 4.5) starts from the premise that robots are limited in their ability to recognize their environment, react to it, and learn from experience. The project aims to demonstrate how robots can become more efficient in the future. According to Professor Hasegawa, this requires environments to be structured so that they are more recognizable to robots. In this way, the necessary complexity is removed from the robot itself and transferred to the intelligent systems that make up the surrounding environment. These systems supply the robot with information such as its own location and the locations of other robots, and direct its actions. This approach was further developed into a “Town Management System” (TMS), in which the town is in constant interaction with the robots, providing them with relevant information and assigning them duties. Thanks to the TMS, a robot no longer needs its own high-performance systems but can rely on the infrastructure already in place, which makes it more mobile. At the same time, 1,000 RFID tags dispersed throughout the town provide the robots with real-time information on their precise location and the state of their surroundings.

Technology: The TMS was designed specifically with senior homes in mind and was tested in such a home, where it was used to support the nursing staff. The test area covered 1,700 m² and was equipped with a video monitoring system.

System Name: Ubiquitous Monitoring System

Developer: Hitachi Laboratories

This monitoring system not only displays instantaneous health parameters (e.g., vital signs) but also monitors them continuously and considers future trends and the implications of any perceived patterns. If a problem is detected with one of the monitored users, the system can take the necessary action, including alerting a caregiver or emergency services. The system can actively track and monitor a variety of users and their daily routines in real time. For example, parents could monitor their children on the way to school to make sure they arrive safely. The system uses peer-to-peer (P2P) technology. It can also be used to track everyday objects such as wallets or backpacks and, should these items be misplaced, notify the user of their location. During development, emphasis was placed on universal use in the context of demographic change. The system could be used in a variety of settings, both public (e.g., by the police) and private (e.g., in the home).
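The system is described as watching both current values and trends. One minimal way to express that idea, assuming readings arrive at regular intervals and that a straight-line trend is good enough, is to check the latest value against a safe range and then extrapolate a least-squares slope a few readings ahead. The thresholds, the `horizon`, and the three-level result are hypothetical choices, not Hitachi’s method.

```python
from statistics import mean
from typing import Sequence


def slope(values: Sequence[float]) -> float:
    """Least-squares slope of evenly spaced readings (units per reading interval)."""
    n = len(values)
    x_bar = (n - 1) / 2
    y_bar = mean(values)
    num = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    den = sum((x - x_bar) ** 2 for x in range(n))
    return num / den if den else 0.0


def assess(readings: Sequence[float], low: float, high: float, horizon: int = 5) -> str:
    """'alert' if the latest reading is out of range, 'watch' if the trend soon leaves it, else 'ok'."""
    latest = readings[-1]
    if not low <= latest <= high:
        return "alert"  # e.g. notify a caregiver or emergency services
    if len(readings) >= 3:
        projected = latest + slope(readings) * horizon
        if not low <= projected <= high:
            return "watch"  # still in range, but heading out of it
    return "ok"


# Usage with hypothetical heart-rate readings (beats per minute) and a 50-110 range.
print(assess([70, 72, 71, 73], 50, 110))    # stable
print(assess([80, 90, 100, 105], 50, 110))  # rising quickly
print(assess([70, 120], 50, 110))           # already too high
```

The middle level is what distinguishes trend monitoring from a simple alarm: a caregiver can be told early, before any single reading is abnormal.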

System Name: Secure-Life Electronics

Developer: Various researchers and companies (e.g., NEC, Toshiba, and Hitachi)

The goal of the Center of Excellence (COE) programs is to further develop technologies based on microsystems technology (MST). This matters because such technologies can improve the quality of life of individuals who need assistance with their daily activities. When developing them, it is important to take a universal approach so as not to exclude people of different cultures, backgrounds, economic standing, etc. These specialized technologies are used for social interaction as well as for technical and physical infrastructure, and therefore positively influence the everyday lives of the aging population and their caregivers. Because they often combine many different aspects, they require developers with a wide variety of specializations. This is true of many technologies presented in this book and facilitates important synergetic technology transfer between fields.

These systems include the following technologies:

Sensory systems

Information processing networks

Actuators

Devices with various application cases

Systems integration

Bio-sensory systems

“Right-Brain Computing”

System Name: Input Devices & Health Care

Developer: Various researchers and companies

According to the national “u-Japan” strategy, “ubiquitous” communication, and specifically the user interfaces required for these communication devices, will play an increasing role in the future. This is a result of the significant demographic changes being experienced by many developed countries, particularly in Asia (e.g., Japan) and Europe (e.g., Germany). The interdisciplinary field of microsystems technology contributes to many areas of the development of these technologies. Research and experimentation on topics such as sensory systems, actuating systems, bio-MEMS, and insect-based robots are ongoing and are expected to bring significant advances in this field in the near future. However, the focus will clearly remain on interfaces that allow seamless and intuitive human-machine interaction.

Technologies in this field include:

Wearable Input Devices

Mobile Pointing Shoes

Systems that provide health data

Organic Semiconductor-based strain sensors

System Name: Ubiquitous Communication

Developer: YRP & UID Center, Ken Sakamura

In Ginza, Tokyo’s business and entertainment district and the most famous shopping quarter in Japan, a large-scale experiment involving RFID tags is ongoing. Approximately 10,000 RFID tags are distributed throughout the district and interconnected with Bluetooth systems, internet servers, special reading devices, and information systems. The experiment is multilingual, allowing people and businesses from abroad to take part as well. This large-scale Tokyo Ubiquitous Network project uses an extensive RFID network to let users determine their exact location and plan their way through the large urban center. Tokyo’s governor, Shintaro Ishihara, inaugurated the experiment at the opening event in Ginza. Since each building contains several stores, bars, and clubs, finding the right one is often difficult. With this technology, the push of a button shows users their exact location and the direction they must take to reach their destination.

Technology: From the beginning of December 2008, RFID tags were placed throughout the entire quarter: on buildings, street corners, and street lamps, and in bars and shops. Reading devices with a 3.5-inch OLED display allowed users to read the RFID tags and obtain location data and directions. Each RFID tag carries a unique code indicating its location. This code is transmitted wirelessly over WLAN to a central server, which sends the requested data (e.g., location, directions) back to the user’s reading device. The experiment, managed by the Tokyo Ubiquitous Computing Center, was a joint venture between the Japanese government, the city of Tokyo, the Ministry of Land, Infrastructure and Transport (MLIT), and several companies. Similar experiments are currently ongoing in other Japanese cities.
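The request/response cycle described above (tag code read, sent to the server, location and directions returned) can be sketched as a server-side lookup. Everything concrete here is hypothetical: the tag codes, the local coordinate grid in metres, the `handle_request` function, and the reply format. The source does not describe the real system’s protocol or data.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Place:
    name: str
    x: float  # metres east of an arbitrary local origin (assumption)
    y: float  # metres north of the same origin


# Hypothetical server-side registry: each tag code maps to a fixed location.
TAG_REGISTRY = {
    "TAG-0001": Place("Street corner A", 0.0, 0.0),
    "TAG-0002": Place("Street lamp B", 120.0, 0.0),
    "TAG-0003": Place("Shop C", 120.0, 80.0),
}


def handle_request(tag_code: str, destination_code: str) -> dict:
    """Resolve the tag the user just read and return distance and compass bearing to a destination."""
    here = TAG_REGISTRY.get(tag_code)
    there = TAG_REGISTRY.get(destination_code)
    if here is None or there is None:
        return {"error": "unknown tag"}
    dx, dy = there.x - here.x, there.y - here.y
    bearing = math.degrees(math.atan2(dx, dy)) % 360  # 0 = north, 90 = east
    return {
        "location": here.name,
        "destination": there.name,
        "distance_m": round(math.hypot(dx, dy)),
        "bearing_deg": round(bearing),
    }


print(handle_request("TAG-0001", "TAG-0003"))
```

Keeping the tag itself as a bare code, with all location data on the server, matches the description above: tags can stay simple and cheap while the map is updated centrally.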

System Name: Interaction: PARO

Developer: AIST, Takanori Shibata

The positive influence animals have on the elderly and on people with psychological disabilities is well known. However, integrating living animals into home care situations is often difficult and impractical. To address this, AIST developed PARO (see Figure 4.6), an advanced interactive robot able to provide the documented benefits of animal companionship while avoiding concerns such as misbehavior, hygiene, noise, and smells that may accompany living animals. PARO has been found to reduce stress in both patients and caregivers and even to stimulate interaction between the two. By interacting with users (speaking with them, listening to them, and responding to their touch), PARO effectively simulates an animal companion and provides direct benefits such as increased social interaction, whether visual, verbal, or physical. PARO can also increase both motivation and relaxation.

Technology: PARO is equipped with five types of sensors: tactile, light, audition, temperature, and posture. These allow the robot to actively perceive people and its environment. The tactile sensor detects touch such as stroking or petting, while the posture sensor detects when, and in what position, it is being held. The audio sensor detects voices (and the direction they come from), greetings, and compliments. PARO can also learn and remember actions; for example, if it is stroked each time after a certain movement, it will try to repeat that movement in order to be stroked again. These sensors and movements allow the robot to respond to users as if it were alive, and it even mimics the voice of a real baby seal.
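PARO’s learning behavior is described only informally. A minimal sketch of it, assuming the robot picks among a few movements at random in proportion to learned weights and raises a movement’s weight whenever stroking follows it, looks like this. The movement names, the learning rate, and the `StrokeLearner` class are made up for illustration and are not AIST’s algorithm.

```python
import random


class StrokeLearner:
    """Favour movements that were followed by stroking (hypothetical sketch)."""

    def __init__(self, movements, learning_rate=0.5, rng=None):
        self.weights = {m: 1.0 for m in movements}  # equal preference at the start
        self.learning_rate = learning_rate
        self.rng = rng or random.Random()
        self.last = None  # movement performed most recently

    def act(self):
        names = list(self.weights)
        self.last = self.rng.choices(names, weights=[self.weights[n] for n in names])[0]
        return self.last

    def feedback(self, stroked):
        # Only a stroke after a movement changes anything; other outcomes are ignored here.
        if stroked and self.last is not None:
            self.weights[self.last] += self.learning_rate

    def probability(self, movement):
        return self.weights[movement] / sum(self.weights.values())


paro = StrokeLearner(["blink", "turn head", "move flippers"], rng=random.Random(0))
for _ in range(20):
    move = paro.act()
    paro.feedback(stroked=(move == "move flippers"))  # the user only rewards flipper movements
print(round(paro.probability("move flippers"), 2))
```

Because rewarded movements become more likely, they are performed more often and can be rewarded again, which reproduces the “repeat what gets stroked” behavior described above.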

System Name: Interaction and Information: WAKAMARU

The WAKAMARU robot (see Figure 4.6) from Mitsubishi was developed primarily as a companion and helper for the elderly and disabled. It is not, however, used for household chores such as vacuuming or unloading the dishwasher. Instead, it acts as a kind of secretary for the user. It can move, follow the user around, take notes, and remind the user of appointments. It can also provide up-to-date information from the internet, such as the weather forecast, and give advice accordingly, such as what clothes to wear or a reminder to take an umbrella. It can also help ensure that users remember vital tasks such as taking their daily medication.

Technology: The robot stands approximately 1 m tall and weighs 30 kg. It has a flat circular base on which it can roll at up to 1 km/h. Facial recognition software allows it to recognize and remember up to 10 people, along with their daily routines and preferences. It is equipped with touch and motion sensors, two video cameras, and four microphones to interact autonomously with its environment. Its ultrasound sensors also let it detect and avoid obstacles. Using this information, it creates a map of all the rooms in the house and is constantly aware of its own location, so it knows where to go and how to get there. Its Linux-based software includes a vocabulary of over 10,000 words, enough to converse with human users. The robot is constantly connected to the internet and can readily answer many knowledge-based questions. It saves all dates, appointments, etc., that it is told and provides reminders as the time approaches. It can operate for approximately 2 hours before it needs charging, which it can also initiate by itself.
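Reminding the user “as the time approaches” amounts to selecting appointments that fall inside a look-ahead window. The 15-minute window, the `(time, text)` tuple format, the `due_reminders` function, and the sample entries below are illustrative assumptions, not details of WAKAMARU’s software.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def due_reminders(appointments: List[Tuple[datetime, str]], now: datetime,
                  lead: timedelta = timedelta(minutes=15)) -> List[str]:
    """Texts of appointments starting between `now` and `now + lead`, earliest first."""
    return [text for when, text in sorted(appointments) if now <= when <= now + lead]


# Usage with hypothetical entries the user has told the robot.
now = datetime(2024, 5, 1, 8, 0)
appointments = [
    (datetime(2024, 5, 1, 8, 10), "Take morning medication"),
    (datetime(2024, 5, 1, 14, 0), "Doctor's appointment"),
    (datetime(2024, 5, 1, 8, 5), "Call your daughter"),
]
print(due_reminders(appointments, now))
```

A robot would call such a check periodically (say, once a minute) and speak each reminder once; tracking which reminders were already given is left out for brevity.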

Table 4.2 Overview of the Technologies Introduced in this Section

| System Name | Developer |
|---|---|
| Panasonic Life Wall | Panasonic |
| Robot Town & Robot Care | Professor Hasegawa, Kyushu University |
| Ubiquitous Monitoring System | Hitachi Laboratories |
| Secure-Life Electronics | Various researchers and companies (e.g., NEC, Toshiba, and Hitachi) |
| Input Devices & Health Care | Various researchers and companies |
| Ubiquitous Communication | YRP & UID Center, Ken Sakamura |
| PARO | AIST, Takanori Shibata |
| WAKAMARU | Mitsubishi |

Figure 4.5 Robot Town & Robot Care.

4.3 Health and Wellness

This section introduces technologies and robots that support elderly and disabled people in personal hygiene and recovery. Table 4.3 gives an overview of the technologies described here.

System Name: Intelligent Toilet

Developer: Toto and Daiwa House Industry

This fully automatic toilet spares users tasks such as opening and closing the cover and flushing, in the hope of improving cleanliness. The toilet is also equipped with various health-monitoring functions, measuring urine sugar levels, blood pressure, body fat, and weight. The built-in urine analyzer is non-invasive, and the device automatically cleans itself after each use. The second version of the toilet can also measure urine temperature and monitor women’s menstrual cycles. The goal of the project is to let users have automated check-ups in the comfort of their own home and to reduce unnecessary trips to the doctor.

Technology: The intelligent toilet (visible in Figure 4.7) collects 5 cm³ of urine from the user for analysis. The sample collector cleans itself automatically after each use. The blood pressure monitor is within arm’s reach of the toilet, and a scale in front of the sink weighs users as they wash their hands. The results of this bathroom check-up are transferred to a home network and analyzed in a computer spreadsheet. Users can then adjust their lifestyle based on their personal analyzed data; for example, they can alter their diet to reduce their sugar intake or increase their daily activity to achieve a healthier body mass index. The system can also advise users whether they should see a doctor in person.
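The body mass index mentioned above is weight divided by the square of height. Since the system as described weighs the user but does not measure height, the sketch assumes height is entered once. The category boundaries are the standard WHO adult cut-offs; the function names and sample weights are hypothetical.

```python
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight divided by height squared (kg/m²)."""
    if height_m <= 0:
        raise ValueError("height must be positive")
    return weight_kg / height_m ** 2


def bmi_category(value: float) -> str:
    """WHO adult categories."""
    if value < 18.5:
        return "underweight"
    if value < 25:
        return "normal"
    if value < 30:
        return "overweight"
    return "obese"


HEIGHT_M = 1.75  # hypothetical: entered once by the user, not measured by the system
weekly_weights_kg = [82.0, 81.6, 81.1]  # hypothetical readings from the sink-side scale
for w in weekly_weights_kg:
    value = bmi(w, HEIGHT_M)
    print(f"{w:.1f} kg -> BMI {value:.1f} ({bmi_category(value)})")
```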

System Name: Bathing Machine HIRB

The “Human In Roll-lo Bathing” (HIRB, visible in Figure 4.7) unit from Sanyo is a compact automatic bathing machine for elderly users. It improves on Sanyo’s first attempt at such a product, the “Ultrasonic Bath,” a concept shown at the 1970 World Expo in Osaka, Japan. The original concept was nearly 2 meters tall and required a ladder to reach the elevated bathing capsule. The HIRB unit is more practically designed and is intended for institutional settings such as care homes for the elderly. The user sits in a chair that rolls backward into the bathtub. The clam-like shell of the bathtub then closes around the user, leaving only the head and shoulders exposed. The wash program, consisting of soaping and rinsing cycles, runs fully automatically. The machine can do everything except shampooing and washing the hair, which must still be done manually. The HIRB unit assists the nursing staff by automatically carrying out work that is time-consuming and physically strenuous, while preserving the patient’s privacy.

System Name: Bathing Machine Santelubain 999

Developer: AVANT

The Santelubain 999 allows the user to climb in and rest in a lying position while undergoing an automated bathing experience. In addition to the necessary bathing functions, the system also possesses some beauty and therapeutic abilities. Some of the options in the system include:

Shampoo and washing

Infrared heating and steam treatment

Audio therapy

Aromatherapy

Seaweed wrap

Body lotion

System Name: Health Phone F884iES

Developer: Fujitsu and NTT DoCoMo

At the Japanese “Wireless Expo 2008,” Fujitsu and NTT DoCoMo presented their “Health Phone” geared toward aging societies. With the F884iES, users are able to check their heart rate wherever they are.

The F884iES can also connect to various Bluetooth- or infrared-enabled Tanita products (Tanita holds approximately 50 percent of the Japanese market for fitness and health scales), such as body fat monitors, blood pressure monitors, and pedometers. With these devices, health data can be saved to a “health calendar” or even sent to the user’s doctor, fitness club, dietician, etc.

Technology: The F884iES is equipped with, among other features, a 2-megapixel camera, a dual color display, a media player, a high-speed internet connection, and expandable hard drive storage. Special features of the phone include a built-in pedometer, an integrated pulse meter, and a second camera.

Users measure their pulse using a method called pulse oximetry, in which a small infrared light is used to measure the pulse through the fingertip. In addition to Fujitsu’s F884iES, Sharp offers a comparable phone (SH706IW) with many of the same features.
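To illustrate how a pulse rate can be derived from such a light signal, the following Python sketch counts peaks in a sampled photoplethysmogram (the pulsatile light-intensity trace that pulse oximetry records). It is a minimal sketch under stated assumptions, not the algorithm used in the F884iES: the function name `estimate_heart_rate`, the sampling rate, and the synthetic test signal are hypothetical, and a real device would also filter out noise and motion artifacts.

```python
import math
from typing import Optional, Sequence


def estimate_heart_rate(samples: Sequence[float], fs: float) -> Optional[float]:
    """Estimate beats per minute by counting peaks in a light-intensity trace.

    samples: readings in arbitrary units, sampled at fs Hz (hypothetical input).
    Returns None if fewer than two beats are found.
    """
    mean = sum(samples) / len(samples)
    # Count a beat at each local maximum that rises above the signal mean.
    peaks = [
        i
        for i in range(1, len(samples) - 1)
        if samples[i] > mean
        and samples[i] > samples[i - 1]
        and samples[i] >= samples[i + 1]
    ]
    if len(peaks) < 2:
        return None
    # Average spacing between consecutive peaks, converted to seconds.
    mean_interval_s = (peaks[-1] - peaks[0]) / (len(peaks) - 1) / fs
    return 60.0 / mean_interval_s


# Synthetic 10-second trace pulsing at 1.2 Hz (72 beats per minute).
fs = 50
trace = [math.sin(2 * math.pi * 1.2 * n / fs) for n in range(10 * fs)]
print(round(estimate_heart_rate(trace, fs)))
```

Averaging the spacing between the first and last detected peak, rather than counting beats in a fixed window, keeps the estimate insensitive to where the recording happens to start and stop.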

System Name: Hitachi Genki Chip

Developer: Hitachi

Blood analysis is a complicated and lengthy process that consumes considerable time and resources. Aging populations make this problem worse, as senior citizens often require such analyses more frequently. As a result, the needs of other patients, who may be in more immediate danger, can be delayed or overlooked.

The Genki Chip from Hitachi makes it possible to consolidate a patient’s important health data, test results, and medical history on one compact chip. This gives doctors immediate access to all of the information they need before administering any treatment. It also reduces the need for costly or elaborate laboratory analyses, as the caregiving staff also have access to the necessary patient information.

Technology: Mental stress can adversely affect the immune system as well as the endocrine system. For this reason, a patient’s psychological state can be assessed through chemical analysis of the blood. The Genki Chip enables this analysis to take place quickly and efficiently, as it records and stores the analysis results. This rapid analysis allows physicians to intervene sooner, so that patients can be treated more quickly and safely in the event of a sudden change in condition.

System Name: Rehabilitation Suit REALIVE™

Developer: Panasonic, Activelink, Kobe Gakuin University

The REALIVE suit was developed as an assistive rehabilitation system for people who have suffered a stroke and lost the ability to move the upper limb on one side of their body due to paralysis. The suit detects movement on the unaffected side of the body and assists the user in mirroring that movement on the paralyzed side. This concept follows directly from medical research finding that “visual feedback of the movement and intensive use of the affected upper limbs can stimulate the cerebral nerves – that go off-line due to cerebrovascular accidents – and improve rehabilitation”. The design focused on a suit that was “visible but invisible”, that looked and felt good to wear, and that maintained the highest level of user safety. The robotic suit was developed for rehabilitation in hospitals and other institutional settings; however, Panasonic hopes to make it affordable enough for home use over time.

Technology: The robotic REALIVE suit senses movement on the unaffected side of the body through a series of sensors. The sensor signals are used to drive artificial pneumatic “rubber muscles” wrapped around the corresponding limbs or muscles on the affected side. These “muscles” help the user mimic the movement on the affected side, and this movement facilitates rehabilitation. The artificial muscles are driven by compressed air from a control unit, which also displays the number of times the muscles have been moved. The suit underwent clinical testing at Hyogo Hospital in 2009.
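The source does not describe how the mirroring is implemented in software. The following Python sketch shows one simple possibility: a proportional controller that raises the pressure in the affected-side artificial muscle while that side lags behind the healthy side, plus a repetition counter like the one shown on the compressed-air unit. The class name `MirrorController`, the joint-angle inputs, the gain, the pressure cap, and the repetition threshold are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class MirrorController:
    """Hypothetical mirror-assist loop; all gains and limits are illustrative."""

    gain_kpa_per_deg: float = 2.0    # pressure added per degree of angle error
    max_pressure_kpa: float = 300.0  # safety cap on the artificial muscle
    rep_threshold_deg: float = 45.0  # healthy-side angle that counts as one movement
    repetitions: int = 0
    _above: bool = False             # whether the healthy side is past the threshold

    def step(self, healthy_deg: float, affected_deg: float) -> float:
        """Return the pressure command for the affected-side artificial muscle."""
        # Count one repetition each time the healthy side rises past the threshold.
        if healthy_deg >= self.rep_threshold_deg and not self._above:
            self.repetitions += 1
        self._above = healthy_deg >= self.rep_threshold_deg
        # Proportional assist: apply pressure only while the affected side lags.
        error = healthy_deg - affected_deg
        pressure = self.gain_kpa_per_deg * error
        return max(0.0, min(self.max_pressure_kpa, pressure))


controller = MirrorController()
print(controller.step(healthy_deg=30.0, affected_deg=10.0))
```

Clamping the command at zero reflects the assumption that a pneumatic muscle can only pull (contract) and never push, while the upper cap stands in for the safety focus the designers describe.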

System Name: RIBA (Robot for Interactive Body Assistance)

Developer: Institute of Physical and Chemical Research (RIKEN), Tokai Rubber Industries (TRI)

The Robot for Interactive Body Assistance (RIBA, visible in Figure 4.8) is an assistive robot that helps nursing staff lift and transport patients. It can assist with the difficult transfers to and from a patient’s bed or wheelchair, as well as to and from the toilet. At the time of its development, it was claimed to be the first robot that could safely and efficiently lift a human being up and set them down again. The robot has a weight capacity of 61 kg (134 lbs). RIBA is the second-generation successor to RI-MAN, which was hampered by concerns over its limited safety and performance. Considering how many times each patient must be lifted and moved each day, RIBA can be a valuable asset: it not only reduces the strain on caregivers’ backs by performing the lifting for them, but also increases the safety of the patients being transported.

Technology: RIBA weighs about 180 kg, stands about 1.4 m tall, and moves on an omnidirectional circular base equipped with omni-wheels. Its ability to lift and transport humans safely and efficiently comes from its strong, human-like arms and their pinpoint accuracy. High-accuracy tactile sensors guide the robot in determining the person’s position and how best to lift them. It is equipped with special joints and link lengths optimized for lifting humans. The system was developed by combining RIKEN’s expertise in control, sensors, and image processing with TRI’s strength in material and structural design. Using its two microphones and two cameras, the robot can detect and respond to basic verbal commands from nurses. The robot is powered by a DC motor and can operate for one hour per charge.

System Name: Robotic Bed

Developer: Panasonic

The Robotic Bed (depicted in Figure 4.8) from Panasonic was developed to assist with transferring elderly patients from a wheelchair into bed. Like other technologies presented in this book, Panasonic’s first attempt at this used strong robotic arms to lift patients. The Robotic Bed, however, is a wheelchair-bed combination that eliminates the need to transfer the patient, as the wheelchair can transform into a bed and vice versa. This also minimizes strain on care staff, as little assistance is required for the transformation. The mattress splits in half, with one half remaining part of the bed and the other half forming the wheelchair. A patient simply shifts to one side of the bed and, after a few remote-controlled adjustments, can sit upright in a modified wheelchair.

Technology: The Robotic Bed is operated with a simple remote control and then completes the transformation into a bed or wheelchair automatically. Power-assisted tilting of the bed distributes the user’s weight so that they do not slip uncomfortably during the transformation, and it also relieves the stress that can build up during extended periods of sitting.

Table 4.3 Overview of the Technologies Introduced in this Chapter

Left: RIBA.

Right: Robotic Bed.

4.4 Information and Learning

In this section, two Information and Learning platforms as shown in Table 4.4 are introduced.

System Name: EZ Touch Remote Control

Developer: Panasonic

The EZ Touch Remote Control (see Figure 4.9) is a product that, in line with the “u-Japan” strategy, aims to promote access to various networks, technologies, and therefore services for the general population and for aging populations in particular. With this device, Panasonic seeks to modernize the remote control through an innovative design. Instead of requiring users to look down at the remote control to locate the correct buttons, the design displays the touch interface on the television screen, where the user is looking anyway. The remote detects whether a user is right-handed or left-handed and adjusts the layout accordingly, placing important buttons within thumb’s reach. The onscreen display can be set to be large and simple, specifically for those hesitant to embrace new technologies or those with poor eyesight.

Technology: The EZ Touch Remote Control is equipped with two touchpads. The dual touchpads allow for multi-touch user manipulation which allows for a variety of gestures that can control aspects such as zooming and scrolling. The remote control still has physical buttons at the center for quick control of volume, channels, and power.

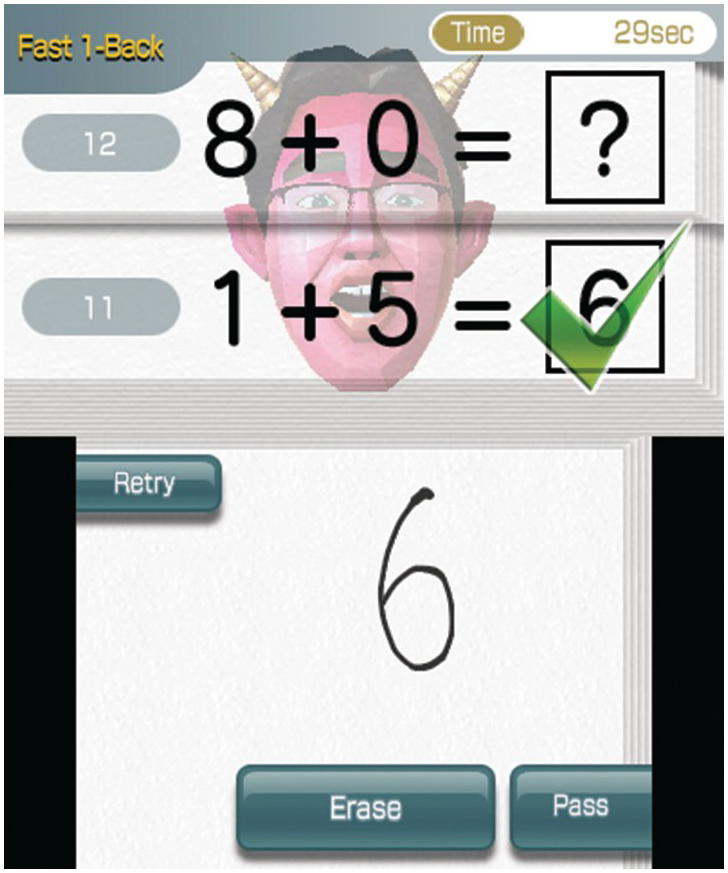

System Name: Dr. Kawashima’s Brain Training

Developer: Nintendo, Dr. Ryuta Kawashima

Dr. Kawashima’s Brain Training (shown in Figure 4.9), also known as Brain Age: “Train Your Brain in Minutes a Day!”, is a video game for the Nintendo DS that uses puzzles and various types of problems to stimulate the brain. Although the game has not been scientifically tested and validated, it is ‘inspired’ by Dr. Kawashima’s research in the field of neuroscience. It allows users that may suffer from decreased brain activity from age, diet, sickness, poor sleep, etc., to evaluate the state of their brain and ‘train’ it back to health through daily exercising. After completing any puzzle, the user is informed of the ‘age’ of their brain based on how fast they were able to complete it. They are also given tips for improvement. In total, there are five modes of play: Brain Age Check, Training, Quick Play, Download, and Sudoku. Each mode of play includes a variety of different games, puzzles, and tasks for the user to choose from.

Technology: The game is designed for daily use, requiring only a few minutes each day to improve your brain and lower your ‘Brain Age’. Daily tasks include mental arithmetic, counting, speed reading, and syllable counting. The Nintendo DS is used as a sort of digital book, with one screen used for display and the other for user input, depending on whether the user is left- or right-handed. It has a touchscreen and a microphone to capture user input. Although Nintendo stresses that it is in the entertainment business only and makes no claims about the game’s effectiveness in improving brain activity, the game is recommended by many neurologists to patients suffering from dementia or Alzheimer’s disease.

Table 4.4 Overview of the Technology Introduced in this Chapter

| System Name | Developer |
|---|---|
| EZ Touch Remote Control | Panasonic |
| Dr. Kawashima’s Brain Training | Nintendo, Dr. Ryuta Kawashima |

4.5 Working

In this section, information and communication systems are presented that support either physical or mental work, e.g., by helping users find the correct path or by reducing the force on their muscles through wearable robots. Table 4.5 gives an overview of the technologies introduced in this section.

System Name: Roppongi Hills R-clicks

Developer: NTT DoCoMo Inc.

NTT DoCoMo Inc. began developing its Roppongi Hills R-clicks system in 2003 (see Figure 4.10). R-clicks was developed as an information service that provides users with detailed information about their surroundings via their cell phones. The system works using RFID tags and was first tested in the Mori Building Co., Ltd. complex in Roppongi Hills, Tokyo. The first participants were each given an RFID tag reader, from which the system could determine their exact location. More than 4,500 RFID tags were distributed throughout the area. The system could then send the desired information to users’ cell phones as they moved through the various complexes, stores, hotels, and entertainment venues of the area. The information was accessed via the phones’ i-mode capabilities. In some cases, the cell phone itself could act as the reader, eliminating the need for a separate scanning device.

Technology: There are three scenarios describing how a user might benefit from R-clicks:

1. Koko Dake (Environment Information)

While standing in any of the 20 or more zones of Roppongi Hills, users can scan an RFID tag and immediately receive detailed information generated specifically for them regarding their environment.

2. Mite Toru (Visit and Listen)

While standing in front of a display board, the user can receive information about specific products, services, etc., related to their current environment.

3. Buratto (Tour)

While moving around, the user can receive current information regarding their environment, including when they are entering into a new zone and what attractions may be within this zone.
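The following minimal Python sketch illustrates the lookup logic these three scenarios imply, assuming that each scanned tag ID maps to a zone and to a piece of location-specific content. The registry, tag IDs, zone names, message texts, and the class `TourSession` are hypothetical; the source does not describe how R-clicks actually stored or delivered its content.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical tag registry: RFID tag ID -> (zone name, information text).
TAG_REGISTRY: Dict[str, Tuple[str, str]] = {
    "tag-001": ("Zone A", "Information about the display board in Zone A"),
    "tag-002": ("Zone A", "Information about a nearby shop in Zone A"),
    "tag-101": ("Zone B", "Information about attractions in Zone B"),
}


class TourSession:
    """Tracks one user's position from the tags their reader scans."""

    def __init__(self, registry: Dict[str, Tuple[str, str]]) -> None:
        self.registry = registry
        self.current_zone: Optional[str] = None

    def on_scan(self, tag_id: str) -> List[str]:
        """Return the messages to push to the user's phone for one scan."""
        entry = self.registry.get(tag_id)
        if entry is None:
            return []  # unknown tag: nothing to send
        zone, info = entry
        messages: List[str] = []
        # 'Buratto'-style notice: announce only when the user enters a new zone.
        if zone != self.current_zone:
            messages.append(f"Entering {zone}")
            self.current_zone = zone
        # 'Koko Dake'/'Mite Toru'-style content tied to the scanned tag.
        messages.append(info)
        return messages


session = TourSession(TAG_REGISTRY)
for tag in ["tag-001", "tag-002", "tag-101"]:
    print(session.on_scan(tag))
```

Keeping the current zone in a per-user session object is what lets the tour mode distinguish "new zone entered" from "another tag in the same zone".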

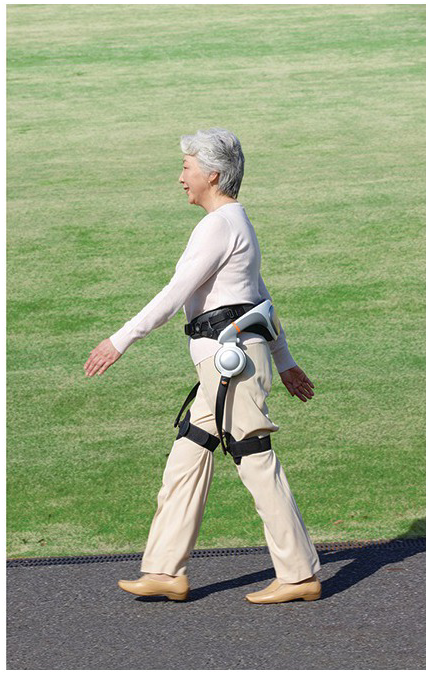

System Name: Stride Management Assist and Bodyweight Support Assist

Developer: Honda

Honda began its research into electronic walking-assist devices in 1999, carrying out various studies of human walking and developing many conceptual variations. Much of this research was devoted to the development of Honda’s signature humanoid robot, ASIMO, but it also led to several stand-alone spinoff technologies with practical real-world applications. Two of these by-products are the ‘Stride Management Assist’ and the ‘Bodyweight Support Assist’. Both systems are ‘worn’ by the user and provide joint and muscle support where required. The Stride Management Assist uses a motor to help the user lift each leg at the thigh while walking. It is designed for people with weakened leg muscles who are still able to walk; it lengthens their stride while regulating their walking pace. The Bodyweight Support Assist allows users to walk as if carrying a ‘lighter’ body. The system supports the user’s upper body to reduce stress on the legs while walking, going up or down stairs, and in semi-crouching positions.

Technology: Both systems (see Figure 4.10) use a series of sensors to detect the motions of the user’s walking. An integrated computer uses this data to control the light metal braces and move seamlessly with the user. The ‘Stride Management Assist’ uses a compact DC motor and the system is relatively light at only 2.8 kg, including the lithium-ion batteries. The batteries have a run-time of approximately 2 hours at a walking pace of 4.5 km/h. The ‘Bodyweight Support Assist’ uses sensors in the shoes to determine the desired movements of both the left and right legs. The system supports the user and reduces the load on their legs while walking, climbing, or even just standing. The system weighs 6.5 kg and also uses lithium-ion batteries that give it a run-time of approximately 2 hours.
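As a rough worked example, assuming the user walks continuously at the stated pace of 4.5 km/h, the Stride Management Assist’s run-time of about 2 hours corresponds to a walking range of approximately

$$
d = v \cdot t \approx 4.5\ \text{km/h} \times 2\ \text{h} = 9\ \text{km}
$$

This figure holds only under that assumption; a slower pace or frequent stops would change the distance covered on one charge.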

System Name: Brain-Machine Interface

Developer: Honda, Toyota, ATR, and Shimadzu Corp.

The “Brain-Machine Interface” (BMI, visible in Figure 4.10) is an early example from the futuristic field of ‘thought-controlled’ robots. The system was developed at the BSI-Toyota Collaboration Center (BTCC), which was established by the Japanese government and its industrial partners. The system processes the user’s brain activity, which can then direct the robot to move left, right, or straight ahead. No implants or invasive surgery are needed, as the system uses a series of sensors placed on the user’s scalp. An emergency stop has been implemented that the user triggers by ‘puffing out their cheek’. In practice, this can be applied to control wheelchair movements regardless of the user’s physical disabilities.

Technology: The idea of using brain activity to control a machine is not entirely new; however, Honda and Toyota have found a way to reduce the system’s reaction time to about 125 milliseconds, a vast improvement over preceding systems. The system accomplishes this by measuring the electrical activity of the user’s brain via electroencephalography (EEG), using a series of five sensors placed over the motor areas of the brain. These electrical impulses are picked up and analyzed by an on-board laptop, which translates them into movement. The system also learns the user’s ‘thinking’ patterns and can adjust to improve its accuracy to as much as 95 percent.
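The source does not describe how the EEG signals are translated into commands. As a hedged illustration only, the Python sketch below uses a nearest-centroid classifier, a simple stand-in technique rather than the developers’ method. During calibration, feature vectors (one hypothetical value per each of the five sensors, for example a band-power measure) are averaged per command, which loosely corresponds to the system learning a user’s patterns; at run time, a new vector is mapped to the closest average. The function names, the feature representation, and the `cheek_puff` flag are assumptions.

```python
import math
from typing import Dict, List, Sequence


def train_centroids(
    calibration: Dict[str, List[Sequence[float]]]
) -> Dict[str, List[float]]:
    """Average the calibration feature vectors recorded for each command."""
    centroids: Dict[str, List[float]] = {}
    for command, vectors in calibration.items():
        dims = len(vectors[0])
        centroids[command] = [
            sum(vector[i] for vector in vectors) / len(vectors) for i in range(dims)
        ]
    return centroids


def decode(
    features: Sequence[float],
    centroids: Dict[str, List[float]],
    cheek_puff: bool,
) -> str:
    """Map one feature vector (one value per sensor) to a movement command."""
    # The emergency stop always overrides the decoded brain signal.
    if cheek_puff:
        return "stop"
    # Nearest-centroid rule: pick the command whose calibration average is closest.
    return min(centroids, key=lambda command: math.dist(features, centroids[command]))


# Hypothetical five-sensor calibration data for the three movement commands.
calibration = {
    "left": [[1.0, 0, 0, 0, 0], [0.8, 0.1, 0, 0, 0]],
    "right": [[0, 0, 0, 0, 1.0], [0, 0, 0, 0.1, 0.9]],
    "straight": [[0, 0, 1.0, 0, 0]],
}
centroids = train_centroids(calibration)
print(decode([0.9, 0, 0, 0, 0], centroids, cheek_puff=False))
```

Checking the stop signal before any classification mirrors the text’s point that the emergency stop must work independently of how well the brain signals are decoded.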

Table 4.5 Overview of the Technologies Introduced in this Subsection

| System Name | Developer |
|---|---|
| Roppongi Hills R-clicks | NTT DoCoMo Inc. |
| Stride Management Assist and Bodyweight Support Assist | Honda |
| Brain-Machine Interface | Honda, Toyota, ATR, and Shimadzu Corp. |

4.6 Mobility

Mobility means quality of life, especially for sick and frail people such as the elderly. Today’s technology allows us to travel many kilometers quickly by train, car, or airplane. However, the long distances are not the critical problem; the short distances are. Supporting devices such as rollators can help but have their limits (e.g., at stairs). Therefore, researchers have investigated wearable robots and new mobility concepts to keep frail and elderly people mobile and thereby independent. In this subsection, the mobility aids listed in Table 4.6 are introduced.

System Name: HAL-5 Enhanced Mobility Suit

Developer: Cyberdyne, University of Tsukuba, Prof. Sankai’s Team, Daiwa House

The Hybrid Assistive Limb (HAL-5) (see Figure 4.11) is a robotic suit worn to enhance the user’s strength and mobility. The suit was designed to assist elderly users with daily activities, but additional applications include assistive rehabilitation, enabling mobility for paralyzed individuals, supporting nurses and factory workers in strenuous activities, and even assisting rescue and clean-up efforts following natural disasters. Interested in robots from a young age, Professor Sankai began developing HAL immediately after receiving his Ph.D. in robotics in 1990. HAL-5 is the fifth iteration of the system; it is notable for its completeness and is already in widespread use.

Technology: When a person wants to move a certain part of their body, nerve signals are sent from the brain to that part of the body. When this happens, weak bioelectrical signals can be detected on the skin over the part of the body the person wishes to move. The suit uses electrodes attached to the user’s skin to detect and analyze these signals. The system then uses them to complement the user’s motion, enhancing the user’s strength by up to five times its normal level. The suit is powered by a 100-volt battery pack mounted at the user’s waist. It includes both a user-activated “voluntary control system” and a “robotic autonomous control system” for motion support.

System Name: WL-16R3 Robot Legs / Walking Wheelchair

Developer: Waseda University, Prof. Takanishi’s Team

The WL-16R3 (see Figure 4.11), also known as the ‘Walking Wheelchair’, was designed as a mobility solution for people who are unable to walk. The design team wanted to address the shortcomings of alternative solutions (e.g., a Segway still requires the user to stand, and wheelchairs are limited by terrain such as stairs). The result was a bipedal robot that the user sits atop and controls with a pair of joysticks, one for each hand. This gives physically disabled users a more flexible range of mobility from a sitting position; they can even navigate stairs safely while seated on the WL-16R3.

Technology: The WL-16R3 stands approximately 122 cm (4 feet) tall and weighs about 68 kg (150 lbs). It is controlled by a single user via two joysticks. In addition to navigating uneven terrain, the robot is capable of vertical movements, for example, when ascending or descending stairs. The legs of the robot take the form of telescoping poles, which allow for the required vertical movements. The poles are fixed to flat plates that act as the ‘feet’ of the system. The robot is battery powered and uses pneumatic cylinders to control the legs. It uses a total of 12 actuators to move forward, backward, or sideways and can carry a user weighing up to 60 kg (130 lbs). The robot can even adjust to the user shifting in the chair to ensure a smooth and comfortable ride. The typical walking stride of the robot is approximately 30 cm, but it can stretch its legs up to 136 cm apart.

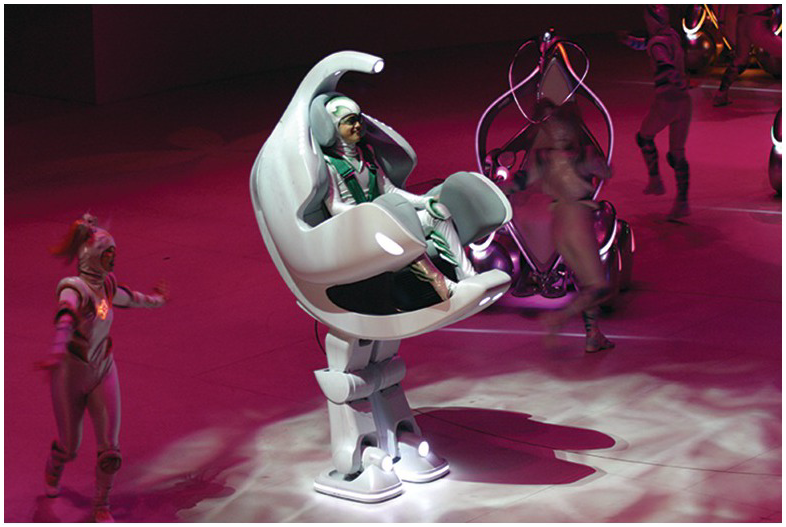

System Name: i-foot / Toyota Mobility Suit

Developer: Toyota

Toyota, widely known for its automotive business, constantly plans for future business streams in hopes of leading the next product revolution. One of its visions involves humans residing, working, and living within immense housing complexes and roofed metropolises. In this scenario, due to spatial and environmental constraints, the traditional automobile may no longer exist in its current form. For this reason, Toyota has developed a series of “i-units” to address the need for futuristic transportation. The basic concept behind the “i-foot” (see Figure 4.12), or Toyota Mobility Suit, is the extension of human ability. These units act as personal transportation vehicles and work together with other household robots to optimize usability. In this context, the system can be especially beneficial for the elderly and/or physically disabled. The connection is clear: for someone who spends their whole life being carried around in a personal vehicle, even a trip to the refrigerator can prove exhausting.

Technology: The inspiration behind Toyota’s “i-foot” comes from their interpretation of bird legs. These legs are mounted on the chassis, and together, provide the movement for the system. The egg-shaped body consists of a bowl-shaped seat that lights up and has steering modules for the operation of the robots. Steering is made possible through the use of an instrument panel and joystick on the right side of the unit. The entire unit stands at approximately 2.36 m tall and weighs about 200 kg. It can accommodate passengers up to a weight of 60 kg and has a maximum speed of roughly 1.35 km/h.

System Name: i-Real

Developer: Toyota

With the “i-Real”, Toyota presents another approach toward ecological and sustainable mobility for future transportation requirements. Despite the futuristic design, Toyota is convinced that single-user vehicles are an integral part of an emission-free transportation future. The concept of the “i-Real” (see Figure 4.12) builds on Toyota’s previous mobility studies of single-passenger vehicles (namely the “i-unit” and the “i-Swing”). So far, the “i-Real” is not ready for mass production, but Toyota’s commitment to individual transportation as part of the future of mobility is clear. The “i-Real” was developed for the urban environment and is tailored to transporting a single adult. The device is compact, roughly the size of a grown adult. The position and orientation of the seat create a sense of unity between driver and vehicle. The “i-Real” is controlled intuitively through sensor fields integrated into the vehicle’s armrests.

Technology: The “i-Real” is driven by an electric motor that powers both front wheels. Its battery can be recharged from a standard electrical outlet, and the vehicle can travel approximately 30 km on a single charge. With its two optimized gears, the “i-Real” is a technical masterpiece. The vehicle is versatile: it can operate safely in pedestrian zones at a cruising speed of 6 km/h but can also keep up with downtown traffic at 30 km/h. Depending on its use (and the corresponding intended speed), the position of the vehicle’s wheels varies. In the slower mode, the rear wheel is pulled in and the vehicle travels in a more upright position, so that the driver is more at eye level with pedestrians. In the fast mode, the rear wheel is pushed further out and the vehicle’s center of gravity is lowered, allowing the driver to sit in a more ergonomic position. For the safety of the driver and nearby pedestrians, the “i-Real” constantly monitors its immediate environment and warns the driver of possible collisions with both audio and visual warnings.

System Name: i-Swing

Developer: Toyota

The “i-Swing” (see Figure 4.13) follows other pod-like single-user vehicles from Toyota and is the fourth installment in this line of development. With its compact and flexible body, the “i-Swing” adapts to its individual use space. It can be adjusted to fit the user’s personal needs and provide them with nearly unlimited mobility. The “i-Swing” has two operating modes: one for slower and more sensitive environments and one for faster-paced environments. In the first, the vehicle uses a two-wheel mode that is dependable and safe and allows accurate control and navigation through pedestrian zones. In the second, it switches to a three-wheel mode that provides high performance and agility in regular street traffic. With its multiple ultramodern communication functions, the “i-Swing” mobility concept goes far beyond the possibilities of conventional vehicles.

Technology: The form of the “i-Swing” resembles less a traditional vehicle than a new life form. Its futuristic, minimalist body is made of anti-shock polyurethane. Through its integrated intelligence system, the “i-Swing” can also remain in constant communication with its driver, at times even appearing as a virtual person on a pop-up display. Over time, the vehicle learns the driver’s behavioral habits and, with this knowledge, can provide desired information and make adjustments accordingly. The outer enclosure of the vehicle is interchangeable, allowing users to put their personal touch on their vehicle. The front door and the rear triangular portion of the vehicle are equipped with color LED matrices that can display pictures, messages, or short videos, depending on the user’s wishes.

System Name: Wheelchair Robot

Developer: Toyota

The concept for the “Wheelchair Robot” (see Figure 4.13) from Toyota is to enable a comfortable way for users to travel short distances. The device is not only intended for transportation between one’s workplace and home, but also for traveling distances within the workplace or within the home. This unit combines individual autonomous mobility with transport ability to replace the requirement of using one’s own legs when getting around. Additionally, the “Wheelchair Robot” has a number of accessory functions. One such function is the ability for the unit to travel adjacent to its “user” and be used as a type of wagon to transport items with heavy loads.

Technology: The underlying concept of the “Wheelchair Robot” is that it is very compact and light compared with other units of similar function. This allows the unit to be easily transported and used across various locations. The device stands at only about 1 m high and this height is increased to only 1.1 m when compacted for transport. It weighs about 150 kg. The device can travel up to a speed of 6.0 km/h and can last for approximately 20 km on a single charge.

System Name: RCAST Group: Space Technology for Rehabilitation Science

Developer: JAXA Institute

The JAXA Institute is attempting to transfer its technical know-how from the spaceflight industry to the field of nursing care. As it is among those who best understand the need for lightweight construction materials and kinematics, it hopes to use this knowledge to develop solutions that reduce the strain of the heavy lifting often required of nursing staff. A GPS-controlled wheelchair with an integrated navigation system and a special matrix display for the blind are just two examples of the effectiveness of this approach.

Technology: Due to the strains of heavy lifting, lightweight equipment, materials, and kinematics are especially important in the field of nursing and caregiving. This transportation system was developed specifically for this domain. The system enables the transportation of patients from one bed to another without large efforts from staff.

The specially developed Braille display enables blind users to control and steer the system. The display works similarly to conventional touch panels, but instead of two-dimensional graphics, it produces raised surfaces that can be touched and understood by those unable to see. The specially constructed lightweight wheelchair (“Dream Carry”) consists mostly of modern plastics and synthetic materials, which keeps its weight to only 5.5 kg. The chair can also be folded into two half-suitcase shells and carried like a suitcase, allowing easy and comfortable transportation to almost anywhere. Source: RCAST Group, Prof. S. Fukushima, Tokyo University, JAXA Japan Aerospace Exploration Agency.

System Name: i-Road (Personal Mobility)

Developer: Toyota

Driving in Japanese metropolitan areas is problematic because parking spots are expensive and often impossible to find in the first place. Without parking spaces, driving becomes impractical. The Japanese government has made special exceptions for tiny city vehicles, or “Kei-Cars”, and demand for these tiny two-seat vehicles has increased drastically as a result. Toyota unveiled the i-Road (or PersonalMobility, PM, see Figure 4.14) at the Tokyo Motor Show to address this increased demand for “Kei-Cars”. The vehicle is enclosed in an egg-like shell that fits snugly around its single user. Toyota goes so far as to call its 1.75 m tall i-Road “the first wearable vehicle in the world”.

Technology: The rolling, egg-shaped i-Road can change its shape depending on the use case. The driver cabin remains upright or tilts downward depending on where the vehicle is being driven. In slower city traffic, the cabin remains upright and gives the user a good view of their entire surroundings. During faster highway use, the cabin reclines to become more aerodynamic. For entering and exiting the vehicle, the cabin is upright to allow easy access and comfortable transitions. The entire front door opens upward to allow unhindered access, and after the door opens, the seat moves outward to make entering easier. The vehicle is steered with two joysticks adjacent to the seat. Only the front wheels are used for steering, and they pivot to allow turning on the spot. The i-Road can also identify and communicate with other i-Road vehicles, even changing its color as it does so. This feature also allows the vehicles to form a kind of convoy, in which one vehicle leads and the others follow on autopilot.

System Name: Toyota Mobility Assistance Program

Developer: Toyota

The motivation behind this recently developed approach is the conviction that senior and/or disabled people should not be discriminated against in Japan. With this goal in mind, Toyota seeks to offer individual transportation solutions tailored to customers’ specific disabilities. The product portfolio ranges from simple devices such as a hand crank to fully automated wheelchair integration into vehicles. With this approach, Toyota hopes to accommodate the different vehicle categories (and, often, financial backgrounds) of its various customers. The themes of “Universal Design” and “Mass Customization” run through the technical requirements of all the products available.

Technology: The “Transport Wheelchair” (visible in Figure 4.14) allows users to enter and exit a vehicle without having to lift themselves from their chair. While the user remains seated, the undercarriage of the wheelchair is pushed over rails and loaded onto an adjustable seat. The tiltable, adjustable seat is very helpful for those who have difficulty entering and exiting vehicles. The seat can be maneuvered beyond the body of the vehicle to provide easy access, and it is adjustable in both height and angle to allow users to be loaded “sideways” from their wheelchairs. The pivoting of the seat can be controlled manually or automatically, and the seat is raised and lowered by an electric motor. A wireless remote control is optional. The final position of the seat is programmable for ease of repeated use.

System Name: Toyota RIN Interior

Developer: Toyota

The gently opening sliding doors of the Toyota RIN Interior (see Figure 4.14) are reminiscent of the doors of a Japanese teahouse. The aim of its development was for drivers to feel as relaxed as if they were actually entering such a teahouse. The warmth of the heated seat, the posture it encourages, and its upright position are likewise meant to resemble those of the traditional Japanese tea ceremony. The idea of meditation is even reflected in the vehicle’s name: RiN represents the Chinese characters for “upright position”. For both the interior and exterior of the vehicle, the developers drew inspiration from the Yakusugi, a type of Japanese cedar. This was intended to keep users in tune with nature and to establish a healthy balance between body and soul. Continuing the focus on the environment, an oxygen regulator and an air humidifier help the vehicle maintain optimal temperature, oxygen, and humidity levels.

Technology: Instead of a classical steering wheel, the RiN uses a steering control resembling that of an airplane. The vehicle is also equipped with a series of sensors that record vital signs in a way similar to an electrocardiogram (ECG). The dashboard features a screen displaying images intended to influence the driver’s mood positively; these gently changing images are meant to relax the user subconsciously. The green-tinted glass of the windshield reduces the penetration of harmful UV rays while still letting plenty of natural light into the vehicle. The lower windows and the doors are also transparent to improve the driver’s overall view of the surroundings. The 3.25 m long, 1.69 m wide, and 1.65 m high RiN is also considerate of other road users: a special function temporarily directs the headlight beams away from oncoming traffic to avoid dazzling other drivers.

System Name: Toyota Sustainable Mobility WINGLET

Developer: Toyota

The futuristic Toyota WINGLET (see Figure 4.15) requires no special riding skills from its users. It balances on its two wheels and is useful in everyday situations. The vehicle is compact yet strong and can be charged from any conventional electrical outlet. It opens up new opportunities in working life, as workers can extend their mobility, their awareness of their surroundings, and their load-carrying capacity. The WINGLET achieves this through a variety of modern technologies. It is intended for use at Japanese airports and will later be tested in large urban centers and shopping malls to gauge the reactions of various users.

Technology: The Toyota WINGLET is available in three versions that share the same platform; the difference lies in the height of the vehicle shaft. On the Model L, the shaft reaches approximately waist height (1.13 m), on the Model M the knee (0.68 m), and on the Model S the calf (0.46 m). The two larger versions weigh approximately 12.3 kg, while the smaller Model S weighs just 10 kg. The different versions are intended for different use cases, and Toyota deems the vehicle suitable for users from “practical to those attempting to ride hands free for excitement”. The maximum speed is about 6 km/h, and with a turning radius of 0 m the vehicle is very agile. The maximum range is between 5 and 10 km depending on the use case, and recharging takes just one hour.

System Name: AIST Intelligent Wheelchair

Developer: AIST, Yutaka Satoh

The development of the AIST Intelligent Wheelchair (visible in Figure 4.15) is aimed at improving the quality of life of older people with greater needs in terms of their social environment. This technology gives wheelchair users the opportunity to move more independently. However, greater mobility also exposes users to more potential dangers, such as accidents involving other road users. For this reason, the wheelchair was developed to monitor the user’s surroundings and avoid these risks.

Technology: The AIST wheelchair features a stereo omnidirectional system (developed by Yutaka Satoh in the JST Human and Object Interaction Processing Project) that produces moving color images in real time with the help of 36 mounted cameras. From these images, the system creates a three-dimensional model of its immediate surroundings. The cameras are arranged so that there are no blind spots. Mounting the system above the wheelchair gives it the best possible position from which to capture its entire surroundings accurately and efficiently. With this information, the wheelchair can brake pre-emptively when it senses danger or a possible collision.
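The pre-emptive braking described above can be illustrated with a time-to-collision (TTC) check. This is a minimal sketch under stated assumptions, not AIST's published algorithm: the `Obstacle` type, the functions `time_to_collision` and `should_brake`, and the 2-second threshold are all hypothetical, and each obstacle's distance and closing speed are assumed to be derived from the 3-D model of the surroundings.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Obstacle:
    distance_m: float         # assumed: gap to the wheelchair, taken from the 3-D model
    closing_speed_mps: float  # assumed: rate at which the gap shrinks (> 0 = approaching)


def time_to_collision(obs: Obstacle) -> float:
    """Seconds until contact if both keep their current speeds; inf if not approaching."""
    if obs.closing_speed_mps <= 0:
        return float("inf")
    return obs.distance_m / obs.closing_speed_mps


def should_brake(obstacles: Iterable[Obstacle], ttc_threshold_s: float = 2.0) -> bool:
    """Hypothetical rule: brake if any obstacle would be reached within the threshold."""
    return any(time_to_collision(o) < ttc_threshold_s for o in obstacles)


# Usage: the second obstacle would be reached in about 1.25 s, below the 2 s threshold.
scene = [Obstacle(5.0, 1.0), Obstacle(1.5, 1.2)]
print(should_brake(scene))  # True
```

A TTC rule is only one simple way to decide when to brake; it ignores the wheelchair's own stopping distance, which a practical system would need to consider.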

System Name: Suzuki SSC

Developer: Suzuki

The Suzuki SSC (Suzuki Sharing Coach, shown in Figure 4.16) is a “minicar-based mobility unit” intended to be paired with the Suzuki PIXY. The PIXY is a single-user, pod-like scooter based on the concept of “friendly mobility” and is intended for low-speed operation on pedestrian footpaths and inside buildings. Combined with the SSC, the PIXY forms a more traditional-looking automobile for higher-speed operation in regular street traffic. One SSC can hold a maximum of two PIXY vehicles. The PIXY can also be coupled with a sports-car unit (SSF) and a boat unit (SSJ). The project aims at a sustainable mobility system in line with the Next Generation Vehicle and Fuel Initiative of the Japanese Ministry of Economy, Trade and Industry, which seeks to realize an environmentally friendly “people-centred motorized society.”

System Name: Universal Vehicle RODEM

Developer: TMSUK Co.

The “universal vehicle” was developed with elderly and physically disabled people in mind: it is designed so that users can get on and off easily, with few physical constraints. As a result, it can be used without care staff having to assist with boarding or alighting. With its many special features, it aims to give users the opportunity to travel freely wherever they want to go.

Technology: The features of the “Universal Vehicle RODEM” include a built-in GPS navigation system for finding the way through unfamiliar parts of the city and an automatic obstacle avoidance mechanism to prevent collisions. An automatic slope correction system, an autonomous navigation function, and voice recognition further assist the user. The vehicle also has a vital sign monitoring system, a feature that is especially valuable for senior users. The vehicle measures 1220 × 690 × 1170 mm, requires a charging time of approximately 4 hours, and has a maximum speed of 6.0 km/h.

System Name: City-Car PIVO

Developer: Nissan, Takashi Murakimi, Cyberdyne, Tsukuba University

The PIVO is a futuristic concept car from Nissan, characterized by its unique pivoting cabin. The car is fully electric and offers excellent visibility on all sides, which is further enhanced by Nissan’s “Around View” technology that eliminates various blind spots around the car. The car is also very compact and easy to maneuver. In addition to the pivoting cabin, the second iteration of the PIVO also has pivoting wheels. This gives drivers much more flexibility and could make parallel parking, and even reversing altogether, a thing of the past: since the wheels can pivot 90 degrees, users can simply drive sideways into and out of a tight parking space. Despite an overall length of only 2.7 m, the car comfortably seats three passengers and is easy to get into and out of thanks to its tall, power-sliding doors.

Technology: The cabin of the car can rotate a full 360 degrees, allowing the driver to face whichever direction is needed. For example, instead of reversing, the driver can simply rotate the cabin and drive “forwards” in the opposite direction. The pivoting of the wheels is powered by an in-wheel electric motor in each individual wheel, supplied by lithium-ion batteries. The PIVO itself is powered by Nissan’s Supermotor and therefore produces zero emissions. Each axle is powered by its own Supermotor, so the axles can be controlled independently for an even distribution of torque to all four wheels. Nissan’s “Drive by Wire” technology controls various functions of the car electronically rather than through conventional mechanical means. In addition to the rotation of the cabin, this approach allows electronic control of steering, braking, shifting, and signaling. Because no mechanical linkages are needed between the cabin and the undercarriage, a rotating cabin becomes possible. The PIVO is also equipped with a robotic agent that assists with navigation, with controlling various features of the car, and even with finding nearby parking spaces.

Table 4.6 Overview of the Mobility Aids Introduced in This Subsection

| System Name | Developer |
|---|---|
| HAL-5 Enhanced Mobility Suit | Cyberdyne, Tsukuba University, Prof. Sankai’s Team, Daiwa House |
| WL-16R3 Robot Legs / Walking Wheelchair | Waseda University, Prof. Takanishi’s Team |
| i-foot / Toyota Mobility Suit | Toyota |
| i-Real | Toyota |
| i-Swing | Toyota |
| Wheelchair Robot | Toyota |
| RCAST Group: Space Technology for Rehabilitation Science | JAXA Institute |
| i-Road (Personal Mobility) | Toyota |
| Toyota Mobility Assistance Program | Toyota |
| Toyota RIN Interior | Toyota |
| Toyota Sustainable Mobility WINGLET | Toyota |
| AIST Intelligent Wheelchair | AIST, Yutaka Satoh |
| Suzuki SSC | Suzuki |
| Universal Vehicle RODEM | VEDA International R&D Center, TMSUK Co. |
| City-Car PIVO | Nissan, Takashi Murakimi, Cyberdyne, Tsukuba University |

Figure 4.16 Suzuki SSC.

Left: Universal Vehicle RODEM.

Right: City-Car PIVO.