QUALITY ASSESSMENTS IN HEALTH CARE ENVIRONMENTS

Introduction

The field of health care architecture is replete with information about evidence-based design, best practice design strategies, and lessons learned from the field for various environments. Yet few of these studies have been replicated, and only a limited number have been performed within the context of a complete hospital environment or a broad network of health care facilities. Most evaluations of health care facilities to date have used different processes, metrics, and tools. This hampers the transferability of findings and impedes the development of a standardized database spanning multiple facilities or departments.

In response, this chapter discusses the development and pilot testing of a new structured post-occupancy evaluation (POE) approach for conducting quality assessments of medical facilities in the military health system (MHS), which seeks to build and operate world-class medical facilities. The MHS wants an assessment program that will inform the development of evidence-based planning and design guidelines for health care projects. In particular, the POE approach, framework, methodology, metrics, and data collection tools are briefly presented. Together they offer insight into how to conduct facility-wide assessments as well as focused assessments within inpatient and outpatient clinical departments. Finally, lessons learned from two successive pilot studies testing the POE methodology are presented.

Quality assessments of health care facilities: a case study of the military health system

A series of articles that first appeared in the Washington Post reported on the run-down conditions and substandard medical treatment afforded to soldiers returning from America’s wars. The more recent scandal involving the hospital system of the US Department of Veterans Affairs is a further case in point. Public exposure of the dilapidated conditions at the MHS’s Walter Reed Army Medical Center spurred interest in, and action toward, change. Consequently, in 2008 the National Capital Region Base Realignment and Closure (BRAC) Health Systems Advisory Subcommittee was charged with reviewing the floor plans for two newly designed MHS hospitals. Its task was to determine whether these facilities were designed and constructed to be world-class (WC) medical facilities. The independent review of these floor plans produced a report entitled “Achieving World-Class.” The report defined a world-class facility as: “The delivery of healthcare in a state-of-the-art facility that consistently delivers superior, high quality care, translating into optimal treatment outcomes at a reasonable cost to the patient and society” (Kizer et al. 2009). With a mandate in place to create and operate world-class facilities, the MHS initiated the development of a facility evaluation program and sought help from Clemson University, NXT, Noblis, and the National Institute of Building Sciences (NIBS).

This chapter highlights lessons learned from a multi-year effort to develop a standardized POE process and toolkit for medical facilities that uses complementary subjective and objective quality indicators. Unlike previous environmental evaluations, this effort assesses quality against a set of indicators derived from the “world-class criteria.” A case study approach was employed for the POE, with two main types of assessment: a broad-brush facility-wide assessment and focused department-level assessments.

The facility-wide inquiry is a limited, broad study of the hospital as a whole, including all departments. Its strength is that it lets evaluators study departmental interrelationships, so they can understand how the facility operates as a whole and how effectively departments are linked. The department-level inquiries, by contrast, are in-depth and allow evaluators to study select departments comprehensively. Their strength is that each unit is understood at a more granular level, so targeted design recommendations can be devised more aptly.

Before the POE started, the inpatient and outpatient clinical units to be studied were identified. The MHS selected three outpatient units (family medicine, surgery, and emergency services) and four inpatient units, including general medical surgical, behavioral health, and obstetrics. The POE methodology and toolkit were developed and tested sequentially in two pilot studies, leading to a refined process and set of metrics and tools. Findings from POEs are expected to drive ongoing improvements in MHS facility guidance and design criteria, such as space planning criteria and room templates. They should also create opportunities to identify best practices in facility design.

Employing a quality improvement approach to conduct health care facility assessments

An important early decision for the project was the choice of an approach to guide the evaluation process and toolkit. Based on the objectives of the evaluation, the short timeline, and lessons learned from the first pilot, the team pursued a quality improvement (QI) approach rather than a scientific research approach for the second pilot. After reviewing the project application, a review committee determined that the project did not qualify as research under federal regulations and therefore did not require Institutional Review Board (IRB) approval. Instead, the POE was deemed a QI project: a study that aims “to assess or improve an existing internal process, or local program/system or to improve performance as judged by established/accepted standards.”

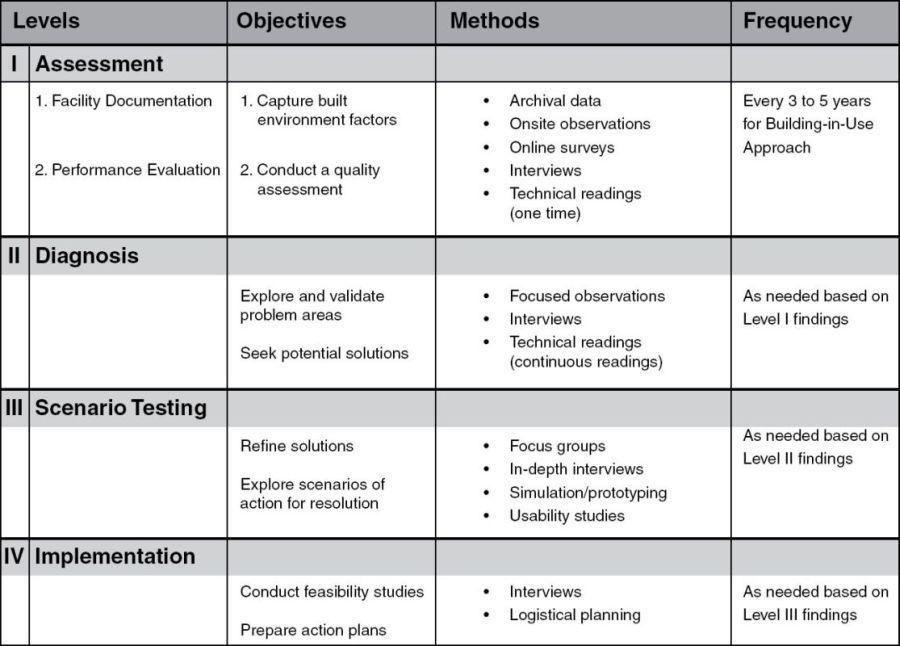

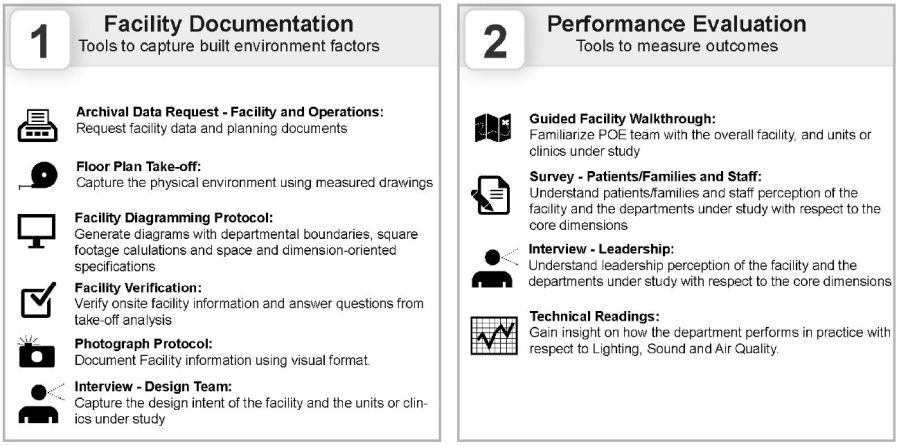

Moving forward, the team proposed a four-level quality improvement process for the evaluation, as shown in Figure 22.1. The first level, Assessment, comprises a Facility Documentation step and a Performance Evaluation step. Together, these steps encompass a facility-wide assessment with focused inquiries in select units. The outcome of this initial assessment is a presentation of the strengths and challenges of the facility design across the evaluation criteria. The team proposed that the quality assessment be repeated over time, so that the accumulated data create a dynamic facility profile that can be related to WC performance benchmarks. The work to date, and the focus of this chapter, is this initial Level I Assessment.

FIGURE 22.1 Quality improvement POE levels

Source: Dina Battisto and Sonya Albury-Crandall.

The second level, Diagnosis, entails in-depth explorations to better understand problem areas identified during the Assessment level. For example, in a POE of an outpatient clinic, staff and/or patients may give the exam room a low satisfaction score. That score could be attributed to the room's size, configuration, equipment, or lighting specifications. Additional studies are then conducted to diagnose and validate the problem and to determine whether related design guidance tools, such as space planning criteria and room templates, need to be reviewed. In the third level, Scenario Testing, and the fourth level, Implementation, the team seeks solutions to the identified problem areas and then develops an implementation strategy to address them. Once the problem is resolved, the team returns to Level I to conduct a follow-up assessment that determines whether the design intervention was effective. The progression through the levels is repeated as necessary. The next section describes the Level I Assessment in more detail.
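As a minimal sketch, the cycle of levels described above can be expressed as a small state transition. The level names follow Figure 22.1. The rule that a Level I assessment which finds no problem areas simply leads to another periodic Level I assessment is an assumption made for illustration; the chapter does not state it.

```python
from enum import IntEnum


class QILevel(IntEnum):
    """The four levels of the QI POE process (Figure 22.1)."""
    ASSESSMENT = 1        # Level I: facility documentation + performance evaluation
    DIAGNOSIS = 2         # Level II: in-depth study of problem areas
    SCENARIO_TESTING = 3  # Level III: seek and test candidate solutions
    IMPLEMENTATION = 4    # Level IV: carry out the improvement strategy


def next_level(level: QILevel, problems_found: bool = False) -> QILevel:
    """Return the level that follows `level` in the QI cycle.

    `problems_found` only matters at Level I. Assumption (not from the
    chapter): with no problem areas, the next step is simply another
    periodic Level I assessment.
    """
    if level is QILevel.ASSESSMENT:
        return QILevel.DIAGNOSIS if problems_found else QILevel.ASSESSMENT
    if level is QILevel.IMPLEMENTATION:
        # After implementation, return to Level I for a follow-up assessment.
        return QILevel.ASSESSMENT
    return QILevel(level + 1)


# Trace one full improvement cycle, starting from an assessment that finds problems.
cycle = [QILevel.ASSESSMENT]
while len(cycle) < 5:
    cycle.append(next_level(cycle[-1], problems_found=True))
print([lvl.name for lvl in cycle])
```

The loop back from Implementation to Assessment mirrors the follow-up assessment that checks whether an intervention worked.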

Development of a standardized POE framework for assessing the quality of health care facilities

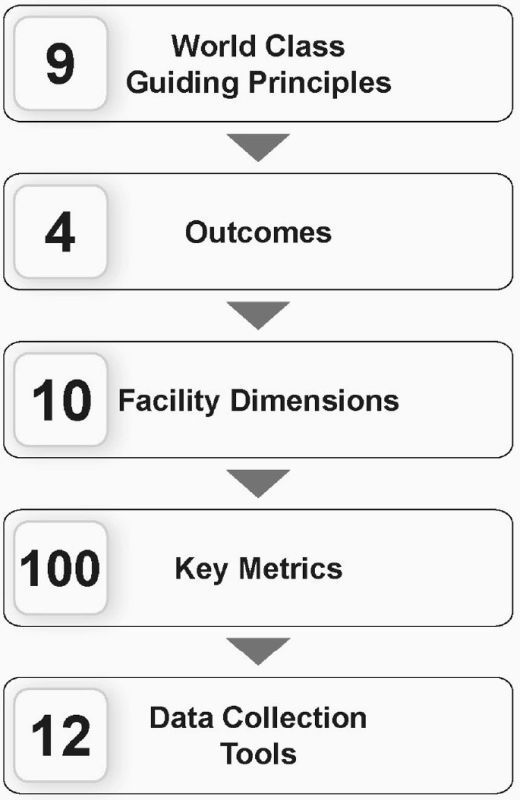

Once the QI POE approach had been determined, the team developed POE components informed by the MHS world-class principles. The nine world-class guiding principles provided the core values and the starting point for the development of the POE toolkit. These principles also informed the ongoing refinement of facility guidance criteria, such as a WC design checklist (originally an evidence-based checklist), space planning criteria, and room templates. The MHS wanted to understand what does and does not work in a completed, occupied facility. This applied both to world-class directives and to the initial design and project goals, so the POE method was selected for the Level I Assessment.

The POE method builds upon the longstanding work of Preiser and colleagues (e.g. Preiser et al. 1988; Preiser and Vischer 2005; Mallory-Hill et al. 2012); more information on the method can be found in Chapter 14 of this book. POE is currently at the forefront of knowledge generation because it assesses design-related decision-making, recommended design strategies, design concepts, and final design solutions in relation to expected outcomes. It also allows the predicted performance captured during the programming and design phases to be compared with the actual performance of the building in use, as captured during the POE. Preiser and Schramm proposed a building performance evaluation (BPE) approach (1997, 2012) as an all-encompassing, life-cycle approach to assessing quality. That methodology was not feasible here, however, because the building was already complete when the team was approached. The POE methodology and toolkit were instead developed hierarchically, with the WC principles translated into quality assessment criteria and performance indicators (Figure 22.2).

FIGURE 22.2 POE components informed by MHS world-class principles

Source: Dina Battisto and Sonya Albury-Crandall.

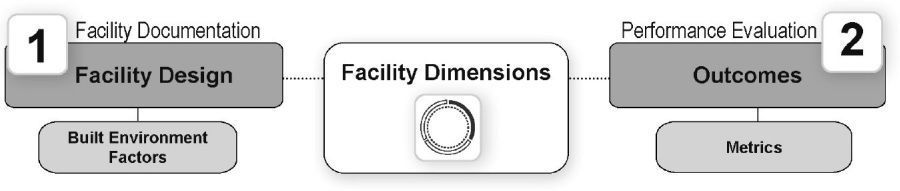

To conduct quality assessments, it is essential to integrate design and research thinking in order to identify relationships between facility design and outcomes. Facility design is what the architect has the ability to shape or create; an outcome is an expected end result valued by a client. Figure 22.3 illustrates the simple back-and-forth relationship between these two critical components. Under this premise, outcomes are defined at the beginning of a project and provide the basis for design decision-making. Along the way, the architectural team formulates claims, or position statements, that predict performance: in other words, how facility design solutions will contribute to achieving expected outcomes. After the building is completed and in operation, these claims are evaluated by measuring actual performance and comparing the findings with what was predicted earlier in the process. Applying research thinking, the performance of the building in use is evaluated with methods and metrics that reveal measured connections between built environment factors and expected outcomes. Findings from the Level I Assessment can then move forward to Level II Diagnosis to further validate problem areas in the facility. Lessons learned can also be fed forward to inform future building projects or updates to POE methodologies. This process of “measurement, evaluation, and feedback” is central to realizing a performance concept (Preiser et al. 1988).
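The comparison of predicted and actual performance described above can be sketched as follows. A design claim stores a predicted value for a metric, and the POE supplies the measured value. The class and function names, the optional tolerance, and the example claims and numbers are all hypothetical illustrations, not part of the MHS toolkit.

```python
from dataclasses import dataclass


@dataclass
class DesignClaim:
    """A position statement predicting how a design solution will perform."""
    statement: str
    metric: str
    predicted: float              # target set during programming/design
    higher_is_better: bool = True


def evaluate_claim(claim: DesignClaim, measured: float, tolerance: float = 0.0) -> str:
    """Compare measured (POE) performance with the predicted value.

    `tolerance` is an assumed allowance, in the metric's own units, within
    which a shortfall still counts as meeting the prediction.
    """
    gap = measured - claim.predicted
    if not claim.higher_is_better:
        gap = -gap                # e.g. travel distance: lower is better
    return "met" if gap >= -tolerance else "not met"


# Hypothetical claims for illustration only.
exam_room = DesignClaim("Exam room layout supports staff workflow",
                        "staff satisfaction (1-5)", 4.0)
walk = DesignClaim("Clinic is a short walk from parking",
                   "parking-to-clinic travel distance (m)", 150.0,
                   higher_is_better=False)
print(evaluate_claim(exam_room, 3.5), evaluate_claim(walk, 120.0))
```

Keeping the direction of "better" on the claim itself lets one comparison rule serve both satisfaction scores and distance-type metrics.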

FIGURE 22.3 Simplified performance concept to connect outcomes to facility design

Source: Dina Battisto and Deborah Franqui.

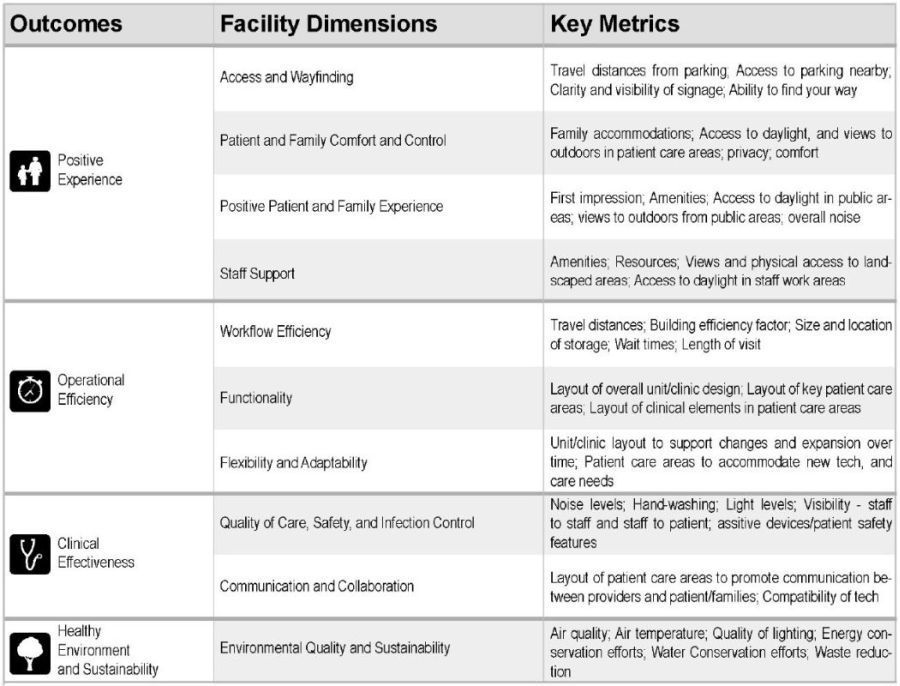

Building on this premise, a performance framework was developed to provide an organizational structure and a linear chain of logic from the beginning to the end of a building project. The framework includes three basic components: outcomes, facility dimensions, and built environment factors.

Development of the framework starts with the identification of outcome areas. An outcome is an end result that can be measured, either subjectively or objectively. Outcomes are what a client values and hopes to achieve, and they can be organized hierarchically to indicate priorities. An outcome may be significant to a building typology in general (a universal outcome) or significant because of the unique influences of a particular project (a project-specific outcome).

Metrics are subjective or objective indicators or measurements used to assess how design solutions contribute, positively or negatively, to achieving expected outcomes. Subjective, or perceived, metrics are data elements drawn from users’ perceptions. They are captured quantitatively through methods such as surveys, or qualitatively through methods such as interviews and focus groups. Objective metrics are data elements captured through actual measurement, using methods such as floor plan take-offs, technical readings, and observations. Comparing perceived and objective metrics makes it possible to triangulate data and strengthen conclusions.
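Triangulating a perceived and an objective metric can be sketched in a few lines, assuming both are first put on a common 0 to 1 scale. The rescaling of a 1 to 5 Likert mean, the 0.5 threshold, and the example wayfinding values are all assumptions for illustration. Divergence between the two sources is exactly the kind of result that would merit Level II Diagnosis.

```python
from statistics import mean


def likert_to_unit(responses, scale_max=5):
    """Rescale the mean of 1..scale_max Likert responses onto 0..1."""
    return (mean(responses) - 1) / (scale_max - 1)


def triangulate(perceived, objective, threshold=0.5):
    """Compare a perceived and an objective score, both on a 0..1 scale."""
    perceived_ok = perceived >= threshold
    objective_ok = objective >= threshold
    if perceived_ok and objective_ok:
        return "convergent: favorable"
    if not perceived_ok and not objective_ok:
        return "convergent: unfavorable"
    return "divergent: investigate further"


# Hypothetical wayfinding data for one unit.
perceived = likert_to_unit([4, 5, 3, 4])   # survey item, mean 4 of 5 -> 0.75
objective = 0.4                            # observed share of unassisted trips
print(triangulate(perceived, objective))   # divergent: investigate further
```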

Facility dimensions, the second component of the performance framework, serve as a lens or filter. They bring forward the most salient architectural design considerations related to the outcomes of interest, as supported by relevant published literature. These dimensions highlight what an architect or designer has the ability to influence, and they can be assessed along a quality continuum. For example, a desired outcome could be a “positive experience for customers,” promoted through easy “access and wayfinding” (a facility dimension). Suppose a person spends considerable time getting from the parking lot to the entrance and then has difficulty finding the way to the intended destination. That customer will most likely report a poorer experience.

FIGURE 22.4 POE performance framework

Source: Dina Battisto, Deborah Franqui, and Mason Couvillion.

The third component, built environment factors (BEFs), comprises the physical attributes of a facility design that are expected to influence outcomes, as shown by published empirical research or professional experience. BEFs represent the interpretation of a recommended design strategy or guideline as manifested in a final design solution, and they can be captured qualitatively or quantitatively. Qualitative BEFs are best captured through diagrams representing the conceptual design solution implemented in the facility. Once the architect finalizes a design solution, it is also advantageous to describe it in writing for archiving purposes. Quantitative BEFs are best captured through design variables measured by methods such as floor plan take-offs (e.g. space allocation, travel distance, and net-to-gross factors).
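The three take-off measures named above (travel distance, net-to-gross factor, and space allocation) reduce to simple arithmetic once plan coordinates and areas are available. The sketch below assumes routes are traced as polylines of (x, y) points. It also assumes the net-to-gross factor is expressed as gross area divided by net area; planning criteria differ on this convention. All input values are hypothetical.

```python
import math


def travel_distance(path):
    """Length of a route traced on a floor plan as a polyline of (x, y) points."""
    return sum(math.dist(a, b) for a, b in zip(path, path[1:]))


def net_to_gross_factor(gross_area, net_area):
    """Gross area divided by net (assignable) area.

    Assumption: expressed as gross / net (e.g. 1.4); some planning criteria
    report the inverse, net / gross, as an efficiency ratio instead.
    """
    if net_area <= 0:
        raise ValueError("net area must be positive")
    return gross_area / net_area


def space_allocation(room_areas):
    """Share of total net area assigned to each room or space type."""
    total = sum(room_areas.values())
    return {name: area / total for name, area in room_areas.items()}


# Hypothetical take-off values (metres and square metres).
print(travel_distance([(0, 0), (3, 4), (3, 10)]))            # 11.0
print(net_to_gross_factor(1400.0, 1000.0))                   # 1.4
print(space_allocation({"exam": 300.0, "support": 100.0}))   # {'exam': 0.75, 'support': 0.25}
```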

To tie front-end and back-end thinking together in the MHS POE, the team developed a customized POE framework, shown in Figure 22.4. It is organized around four outcome areas: positive experience, operational efficiency, clinical effectiveness, and healthy environment and sustainability. The framework also includes ten facility dimensions aligned with these outcome areas, together with the corresponding metrics or indicators used to measure performance.
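One way to picture the framework as a data structure is sketched below. Each facility dimension serves an outcome area and carries its built environment factors and metrics. The four outcome areas come from the text. Only the access and wayfinding dimension is filled in, because the chapter does not list the other nine here. The BEF names, metric names, and class layout are hypothetical.

```python
from dataclasses import dataclass, field

OUTCOME_AREAS = (
    "positive experience",
    "operational efficiency",
    "clinical effectiveness",
    "healthy environment and sustainability",
)


@dataclass
class Metric:
    name: str
    kind: str                # "subjective" or "objective"
    tool: str                # data collection tool, e.g. "survey"
    universal: bool = True   # False for a project-specific metric


@dataclass
class FacilityDimension:
    name: str
    outcome: str             # one of OUTCOME_AREAS
    built_environment_factors: list = field(default_factory=list)
    metrics: list = field(default_factory=list)


def metrics_by_outcome(dimensions):
    """Group metric names under the outcome area each dimension serves."""
    grouped = {outcome: [] for outcome in OUTCOME_AREAS}
    for dim in dimensions:
        if dim.outcome not in grouped:
            raise ValueError(f"unknown outcome area: {dim.outcome!r}")
        grouped[dim.outcome].extend(m.name for m in dim.metrics)
    return grouped


# Only one of the ten dimensions is filled in; BEF and metric names are hypothetical.
access_wayfinding = FacilityDimension(
    name="access and wayfinding",
    outcome="positive experience",
    built_environment_factors=["parking-to-entrance route", "directional signage"],
    metrics=[
        Metric("perceived ease of wayfinding", "subjective", "survey"),
        Metric("parking-to-entrance travel distance", "objective", "floor plan take-off"),
    ],
)
print(metrics_by_outcome([access_wayfinding])["positive experience"])
```

Rejecting unknown outcome areas is one simple way to keep every dimension tied to the agreed framework.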

Development of a standardized POE methodology for assessing the quality of health care facilities

To address standardization and the comparability of data across study sites, a two-step process was developed for the Level I Assessment: facility documentation and performance evaluation. The first step, facility documentation, is a systematic process for capturing the qualitative and quantitative aspects of the built environment factors, aligned with expected outcomes through the performance framework. Ideally, this step starts at the beginning of a project, so that data elements from the planning, programming, design, and construction phases are captured consistently in a predefined format. Because this evaluation was conducted after the building was occupied, however, facility data had to be collected retrospectively. Six essential data collection tools were developed for capturing facility data.

Diagramming is a useful visual approach for capturing qualitative, holistic data, whether design concepts or more concrete design solutions such as the facility or unit layout. Quantitative data, in contrast, are best represented in data tables and displays. Measurements such as area sizes, net-to-gross factors, and travel distances collected from floor plan take-offs are examples of data that lend themselves to objective tabulation and comparison.
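Capturing data elements "in a predefined format" can be sketched as a fixed record type that rejects unknown project phases and flags retrospective capture. The example also includes a check for which required data elements are still missing for a unit. The field names, the `missing_elements` helper, and the "Site A" example are hypothetical, and are not the six MHS tools.

```python
from dataclasses import dataclass
from typing import Union

# Project phases named in the chapter; records outside these are rejected.
PHASES = ("planning", "programming", "design", "construction")


@dataclass(frozen=True)
class DocumentationRecord:
    """One facility-documentation data element in a fixed, shared format."""
    facility: str
    unit: str
    phase: str
    data_element: str            # e.g. "net-to-gross factor"
    value: Union[float, str]     # a measurement, or a reference to a diagram
    retrospective: bool          # True if captured after occupancy

    def __post_init__(self):
        if self.phase not in PHASES:
            raise ValueError(f"phase must be one of {PHASES}, got {self.phase!r}")


def missing_elements(records, facility, unit, required):
    """Required data elements not yet documented for one unit at one site."""
    captured = {r.data_element for r in records
                if r.facility == facility and r.unit == unit}
    return sorted(set(required) - captured)


rec = DocumentationRecord("Site A", "family medicine", "design",
                          "net-to-gross factor", 1.4, retrospective=True)
print(missing_elements([rec], "Site A", "family medicine",
                       ["net-to-gross factor", "travel distance"]))
```

Using one shared record shape across sites is what makes later cross-facility comparison possible.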

The second step, performance evaluation, is a systematic process for assessing the effects and benefits of the facility design, that is, of the qualitative and quantitative built environment factors. It uses a POE methodology that encompasses the variety of data collection tools noted in Figure 22.5. To carry forward lessons learned from the performance evaluation, a third step should be considered: translation. During this step, a research and design team translates lessons learned from the Level I Assessment for three purposes: to feed findings forward to other comparable facilities, to help validate and then correct problems identified within the studied facility (Levels II through IV), and to refine the POE methodology.

Development of standardized POE metrics and tools for assessing the quality of health care facilities

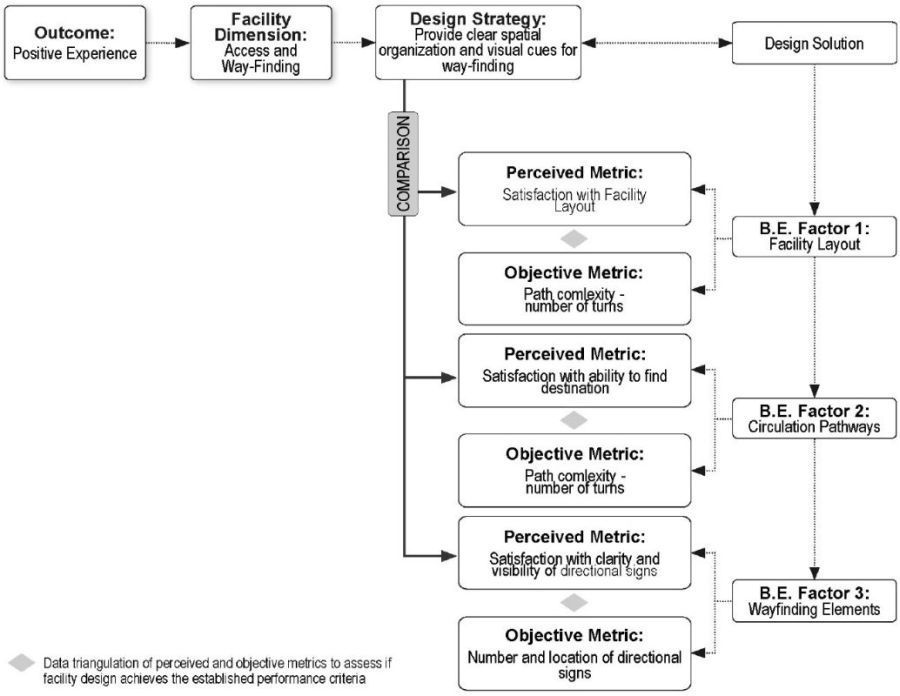

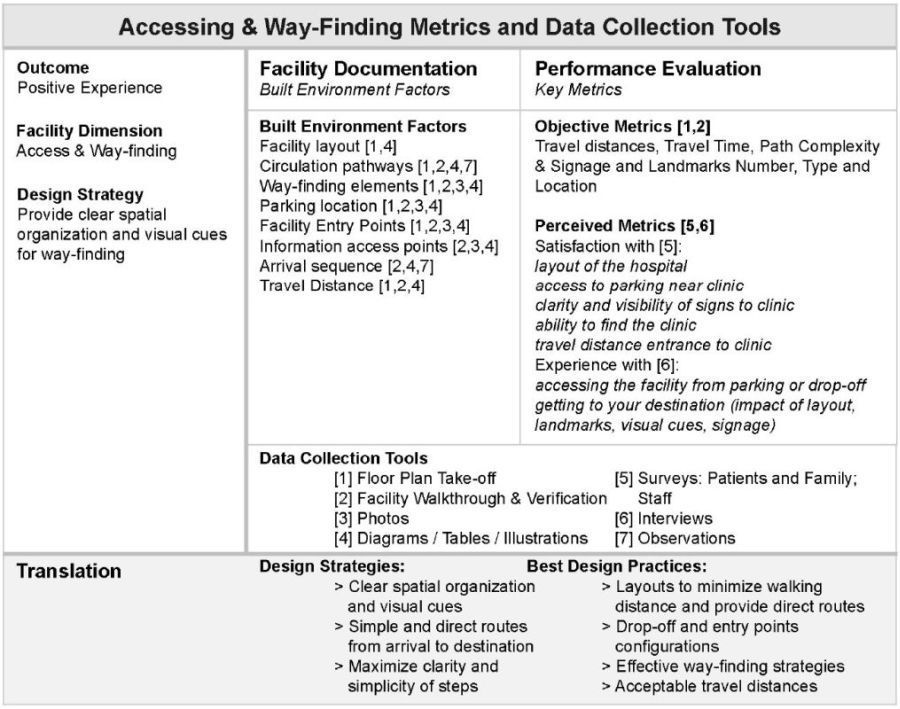

The ten facility dimensions are formal groupings used to organize the POE data elements, methodology, and tools. Overall, the team identified more than 100 metrics across the ten dimensions, which are used to conduct the quality assessment of the select inpatient and outpatient departments. Once the set of key metrics was defined, the tools were developed. Given the complexity of the project, the access and wayfinding dimension serves here as an example. Figure 22.6 depicts how its built environment factors can be linked to subjective/perceived and objective metrics.

The complexity of environmental research warrants a multi-method approach to studying the relationships between outcomes and built environment factors. Figure 22.7 shows how an outcome (positive experience) is studied in relation to a facility dimension (access and wayfinding). This is done by connecting the relevant built environment factors captured during the facility documentation step with the subjective and objective metrics studied during the performance evaluation step. The figure also shows the metrics and data collection tools used for the access and wayfinding dimension. The overall goal is to understand how a positive experience can be achieved through effective access and wayfinding design strategies. Findings from the POE are then synthesized into best practice design strategies during the translation step.
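The multi-method principle above can be checked mechanically: each built environment factor should be linked to at least one subjective and at least one objective metric. The sketch below reports the gaps. The link names are hypothetical access and wayfinding examples, and treating "one of each kind" as the minimum requirement is an assumption.

```python
REQUIRED_KINDS = {"subjective", "objective"}


def coverage_gaps(links):
    """Return, per built environment factor, the metric kinds still missing.

    links maps a BEF name to a list of (metric_name, kind) pairs, where kind
    is "subjective" or "objective".
    """
    gaps = {}
    for bef, metrics in links.items():
        present = {kind for _name, kind in metrics}
        missing = REQUIRED_KINDS - present
        if missing:
            gaps[bef] = missing
    return gaps


# Hypothetical access and wayfinding links.
links = {
    "parking-to-entrance route": [
        ("perceived ease of access", "subjective"),              # survey
        ("parking-to-entrance travel distance", "objective"),    # take-off
    ],
    "directional signage": [
        ("perceived clarity of signage", "subjective"),
    ],
}
print(coverage_gaps(links))  # {'directional signage': {'objective'}}
```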

FIGURE 22.5 POE phases and data collection tools

Source: Dina Battisto, Deborah Franqui, and Mason Couvillion.

FIGURE 22.6 Example of metrics for access and wayfinding

Source: Dina Battisto and Deborah Franqui.

FIGURE 22.7 Example of data collection tools and metrics for access and wayfinding

Source: Dina Battisto and Deborah Franqui.

Lessons learned for quality assessments in health care environments

The aim of the MHS POE project was to develop and pilot test a facility evaluation methodology and toolkit aligned with established world-class criteria. The process yielded findings applicable to both military and civilian medical facilities. The recommendations below are drawn from lessons learned in the two pilot studies.

1 Identify an approach based on the POE objectives. The choice is between a quality improvement (QI) intent, aimed at problem-seeking and problem-solving, and a research project, aimed at producing generalizable knowledge. A research proposal should be considered only when a specific hypothesis is to be tested, or when a generalizable practice standard is to be established where none is already accepted; otherwise, the POE should follow a QI strategy. Another important recommendation is to work jointly with the local site team, with “POE champions” serving as site team leaders and as the source for distributing information and coordinating activities. POE champions are instrumental in facilitating local site briefings, securing executive-level approvals, scheduling in-person interviews, and collecting all background information. It is further recommended to avoid collecting sensitive data, such as quality and safety information (falls, medical errors, and infection rates). Such data are more aligned with focused research studies and could delay the process.

2 A post-occupancy (building-in-use) inquiry is well suited to “after the fact” performance evaluations (Preiser et al. 1988). As a research method, POE is defined as a “process of evaluating buildings in a systematic and rigorous manner after they have been built and occupied for some time” (Preiser et al. 1988). In the case of the MHS, building performance is measured and compared against the world-class criteria. The results of the evaluation can then be fed forward to update planning and design tools such as space planning criteria, programs for design, and room templates.

3 It is important to establish an organizing structure, specifically a performance framework that includes outcomes, related facility dimensions, and metrics to assess the performance of a final design solution captured through built environment factors. A performance framework should be developed for the unit of analysis and for a specific department type (or facility type), in order to identify best and worst practices.

4 It is recommended to select and define a set of common metrics to generate a facility database repository that can be used to identify performance targets and benchmarks. One of the biggest lessons learned involves the need to establish priorities for the POE with a particular emphasis on defining data elements that can be compared across similar units or facilities, i.e. universal data elements, and others that may be specific to a project or service line, namely project-specific data elements. When data elements can be studied across units as well as at the hospital level, trends can be identified so the organization can define best practices worthy of repeating at the system level or other practices that require additional study. A challenge encountered during the two pilot studies was that some of the units and service lines were unique to that particular facility and therefore the transferability of findings was questionable. One of the considerations for the future is to determine beforehand whether a site or service line is so unique that the findings may not be readily comparable to another site, thus limiting the utility of the POE findings for broader applications.

5 A standardized process and set of tools that can be replicated are critical to ensuring comparable data across similar projects and service lines. POE has stimulated bursts of activity over the years and has many followers. The biggest criticism of it, however, is the “lack of indicators and accompanying benchmarks” for establishing what constitutes a “good building” (Zimmerman and Martin 2001). Because POE typically combines multiple methods, much like a case study, the same methods, tools, and metrics are rarely used consistently across evaluations. As a result, findings are difficult to compare objectively across facilities (Bechtel 1997). This ongoing limitation has led to less application of the POE method than it deserves. Consider a POE method used without a unifying performance framework adopted for projects of a specific building type, and without a standardized set of processes, metrics, and tools. Such a method has limited potential for developing a coordinated database and benchmarks.

6 Findings should be translated into evidence-based planning tools. Medical facilities planned and designed for the MHS are informed by recommended and required guidance criteria. Recommended criteria developed by the MHS include space planning criteria and room templates. Many of these tools are currently being revised and expanded upon to comply with the latest world-class criteria. In addition to the recommended criteria, design teams for new MHS facilities are required to comply with certain codes and mandates. A standardized POE process and toolkit implemented at multiple comparable facilities would yield valuable findings for updating these planning and design tools. Therefore, the POE process should align with existing planning and design guidelines and tools to assess the merits of these recommendations. In addition to universal guidance criteria, the MHS has project-specific criteria to guide the planning, design, and project implementation process of a particular facility such as a program for design (PFD), concept of operations (CONOPS) manual, the operational process plan, and the functional concept manual. These documents were valuable for capturing the initial intentions of the facility design and are used in conjunction with the evaluation. It is recommended that civilian facilities also archive up-front planning and design information in a consistent format.
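The distinction in recommendation 4 between universal and project-specific data elements, and the benchmarking goal in recommendation 5, can be sketched together. Only universal elements are pooled across facilities, and a simple benchmark is computed from them. Using the median as the benchmark is an assumption chosen because it is robust to one unusual site. The site names, data elements, and values are hypothetical.

```python
from statistics import median


def benchmarks(observations, universal):
    """Median value of each universal data element across facilities.

    observations: iterable of (facility, data_element, value) tuples.
    universal: set of data elements defined as comparable across sites;
    project-specific elements are skipped.
    """
    values = {}
    for _facility, element, value in observations:
        if element in universal:
            values.setdefault(element, []).append(value)
    return {element: median(vals) for element, vals in values.items()}


def deviation_from_benchmark(value, benchmark):
    """Relative deviation of one facility's value from the benchmark."""
    return (value - benchmark) / benchmark


# Hypothetical observations (areas in square metres).
obs = [
    ("Site A", "exam room area", 11.0),
    ("Site B", "exam room area", 12.0),
    ("Site C", "exam room area", 13.0),
    ("Site A", "hyperbaric suite area", 40.0),   # project-specific, excluded
]
bench = benchmarks(obs, {"exam room area"})
print(bench)                                                          # {'exam room area': 12.0}
print(round(deviation_from_benchmark(13.0, bench["exam room area"]), 3))  # 0.083
```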

Conclusion

This chapter presented a performance framework, a POE methodology, and lessons learned from a multi-year effort to develop a standardized approach for the military health system to conduct quality assessments in health care environments. The MHS holds that evidence-based planning and design criteria should be informed by findings from a structured POE process and toolkit. The MHS is one of the largest health care systems in the world, managing over $30 billion in physical facilities, including 660 outpatient clinics and 56 hospitals. It therefore has strong incentives to build a knowledge base that informs front-end thinking. A standardized POE approach is envisioned to yield findings that inform the development of evidence-based space planning criteria, design checklists, room templates, and codes. Finally, adopting a standardized POE process with a common set of metrics may yield a facility database that reveals objective best practices, benchmarks, and targets.

Acknowledgments

The authors wish to acknowledge the POE team, including: Health Affairs/Portfolio Planning – Michael Bouchard; Noblis – Sharon Steele; Clemson University/NXT – Mason Couvillion, Salley Whitman, Justin Miller, and Dianah Katzenberger; Joint Task Force Capital Region (JTF Cap Med) – Lt Col Weidman and Patricia Haley; USA Health Facility Planning Agency – Trillis Birdseye, Pamela Ferguson, and Brenda McDermott; US Army Corps of Engineers – Antony Travia and Gary Spivey; Community Hospital at Fort Belvoir – LTC Gantt, POE Champion and Lead Site Investigator; and HDR – Barbara Dellinger, Julian Jones, Erin Viviani, and Kelley Dorsett. Finally, the authors would like to thank Elizabeth Cooney for help with the graphics in this chapter.

References

Bechtel, R. B. (1997) Environment and Behavior: An Introduction. Thousand Oaks, CA: Sage Publications.

Kizer, K. W., M. McGowan, and S. Bowman (2009) Achieving World-Class: An Independent Review of the Design Plans for the Walter Reed National Military Medical Center and the Fort Belvoir Community Hospital. Falls Church, VA: National Capital Region Base Realignment and Closure Health System Advisory Subcommittee of the Defense Health Board.

Mallory-Hill, S., W. F. E. Preiser, and C. Watson (eds) (2012) Enhancing Building Performance. Oxford: Wiley-Blackwell.

Preiser, W. F. E., H. Z. Rabinowitz, and E. T. White (1988) Post-Occupancy Evaluation. New York: Van Nostrand Reinhold.

Preiser, W. F. E. and U. Schramm (1997) “Building Performance Evaluation.” In D. Watson, M. J. Crosbie, and J. H. Callender (eds), Time-Saver Standards for Architectural Design Data (7th edn). New York: McGraw-Hill, pp. 233–38.

Preiser, W. F. E. and J. C. Vischer (eds) (2005) Assessing Building Performance. Burlington, MA: Elsevier Butterworth-Heinemann.

Zimmerman, A. and M. Martin (2001) “Post-Occupancy Evaluation: Benefits and Barriers.” Building Research & Information 29(2): 168–74.