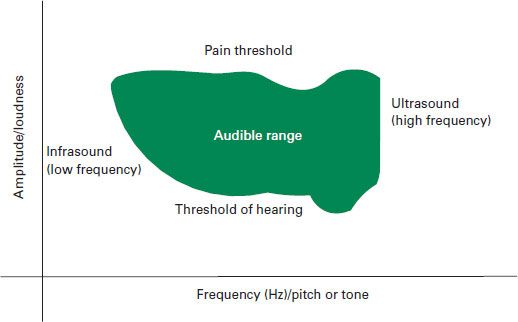

HUMAN EARS ARE SENSITIVE TO SOUND to different degrees across the frequency range. We are most sensitive to mid-frequencies, and it is not surprising that our ears are best at understanding sound in the frequency range of speech. Our sensitivity to different frequencies also varies with sound pressure level: as the level increases, the differences flatten out. Figure 3.1 shows the human hearing range, from the threshold of audibility to the threshold of pain.

The human hearing range extends from approximately 20 Hz to 20,000 Hz. Sound below and above that range is inaudible to humans but may be audible to some animals. Examples include the frequencies bats use to orient themselves (echolocation) and those produced by dog whistles.

3.1 Human audible range

Table 3.1 Permissible noise exposure in hours per day at a given sound pressure level (OSHA 1910.95)

| Duration per day (h:mm) | Sound pressure level, slow response (dBA) |
|---|---|
| 8:00 | 90 |
| 6:00 | 92 |
| 4:00 | 95 |
| 3:00 | 97 |
| 2:00 | 100 |
| 1:30 | 102 |
| 1:00 | 105 |
| 0:30 | 110 |
| 0:15 or less | 115 |

Humans also have an audible range of amplitudes, from very faint sounds around 0 dB to painful sounds at about 130 dB. Above the threshold of pain, our ears can be permanently damaged in a matter of seconds. Long exposures at lower levels can also damage our ears. Table 3.1 lists the maximum daily exposure time permitted by OSHA at each sound pressure level. Note: permissible levels in Europe are more stringent. Exposures of 6 hours at 80 dB, 2 hours at 95 dB, or 45 minutes at 90 dB are high enough to trigger requirements for noise assessment and hearing protection.
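The durations in Table 3.1 follow a simple rule. The criterion is 90 dBA for 8 hours, and the permitted time halves for every 5 dB increase. OSHA 1910.95 also combines exposures at different levels into a daily noise dose. The sketch below reproduces both calculations. The function names and the example shift are illustrative only, not part of the standard.

```python
# Sketch of the OSHA 1910.95 relation behind Table 3.1:
# 90 dBA criterion level for 8 hours, 5 dB exchange rate.

def permissible_hours(level_dba: float) -> float:
    """Permissible daily exposure time T (hours) at a given A-weighted level."""
    return 8.0 / 2 ** ((level_dba - 90.0) / 5.0)


def noise_dose(exposures: list[tuple[float, float]]) -> float:
    """Daily noise dose in percent, from (level_dba, hours) pairs.

    Each exposure contributes hours / permissible_hours(level);
    a total above 100 % exceeds the permissible exposure.
    """
    return 100.0 * sum(hours / permissible_hours(level) for level, hours in exposures)


if __name__ == "__main__":
    for level in (90, 92, 95, 97, 100, 102, 105, 110, 115):
        t = permissible_hours(level)
        h, m = divmod(round(t * 60), 60)  # convert hours to h:mm
        print(f"{level} dBA -> {h}:{m:02d}")
    # Hypothetical shift: 4 h at 90 dBA plus 2 h at 95 dBA
    print(f"dose = {noise_dose([(90, 4), (95, 2)]):.0f} %")
```

Some computed durations differ slightly from the table; for example, 92 dBA gives about 6:04 rather than 6:00, because the published table rounds these values. The hypothetical shift reaches a dose of exactly 100 %, the permissible limit.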

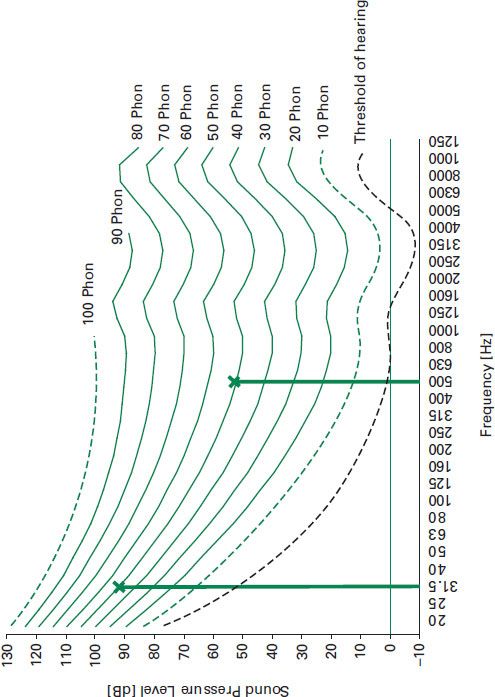

LOUDNESS IS THE SUBJECTIVE IMPRESSION of level (what we commonly call “the volume” of a sound). Our ears respond differently to sound at different frequencies, so two sounds at the same sound pressure level will not necessarily sound equally loud.

3.2.1 Equal loudness contour

Figure 3.2 shows how loud pure tones of different frequencies are perceived to be. Each curve joins tones of equal loudness, expressed as a loudness level in phons. By definition, the loudness level in phons equals the sound pressure level of an equally loud 1,000 Hz tone. The curves follow the shape of the threshold of audibility and become flatter as loudness increases. The figure also shows that the human ear has very low sensitivity at low frequencies. For example, a pure tone at 31.5 Hz with a level of 95 dB sounds as loud to us as one at 1,000 Hz with 50 dB: both have a loudness level of 50 phons.

3.2 Equal loudness contours (based on ISO 226:2003)
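ISO 226:2003 gives a closed-form expression for the curves in Figure 3.2, with three tabulated parameters per frequency. The sketch below evaluates that expression at three frequencies to check the 50-phon example numerically. The parameter values were transcribed from the standard's table and should be verified against it before reuse. The standard also limits the range of loudness levels over which the formula applies. The dictionary and function names are hypothetical.

```python
import math

# ISO 226:2003 parameters (transcribed; verify against the standard):
# frequency in Hz -> (alpha_f exponent, L_U magnitude correction in dB, T_f threshold in dB)
ISO226_PARAMS = {
    31.5: (0.480, -23.0, 59.5),
    500.0: (0.267, 0.0, 4.4),
    1000.0: (0.250, 0.0, 2.4),
}


def equal_loudness_spl(freq_hz: float, phon: float) -> float:
    """Sound pressure level (dB) of a pure tone at freq_hz whose loudness level is `phon`."""
    alpha_f, l_u, t_f = ISO226_PARAMS[freq_hz]
    a_f = (4.47e-3 * (10 ** (0.025 * phon) - 1.15)
           + (0.4 * 10 ** ((t_f + l_u) / 10 - 9)) ** alpha_f)
    return (10 / alpha_f) * math.log10(a_f) - l_u + 94


if __name__ == "__main__":
    for f in ISO226_PARAMS:
        print(f"{f:>6} Hz at 50 phon: {equal_loudness_spl(f, 50):.1f} dB")
```

At 50 phons the sketch gives roughly 94 dB at 31.5 Hz, about 53 dB at 500 Hz, and 50 dB at 1,000 Hz, as the definition of the phon requires.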

3.3.1 Localization

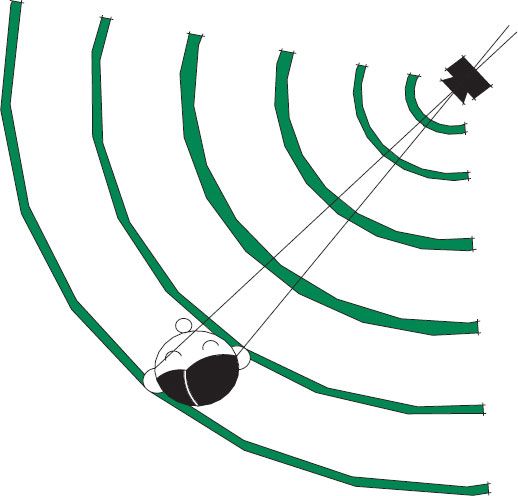

Because humans have binaural hearing (at least those without hearing loss in one or both ears), we are capable of localizing sound. This ability is comparable to stereoscopic vision, the ability to perceive depth that we owe to having two eyes. Our ears let us localize sound in the horizontal plane with a precision of about 1 degree. What happens in the vertical plane, though?

Our ears are located on either side of the head, which makes it difficult to locate sounds arriving from positions equidistant from both ears. Before we can point to the location of a source, the brain compares the two signals received by the ears. It relies mainly on two characteristics of those signals. Interaural Level Difference (ILD) is the difference in level between the two signals. It is produced by the head shadowing the ear farther from the source, and by the slight drop in level over the longer path. Interaural Time Difference (ITD) is the difference in arrival time at the two ears. We cannot consciously say how different those signals are, or even perceive them as two separate signals. Even so, our brain processes the differences and gives us an answer regarding location.

In the vertical plane, however, our brain relies on frequency (spectral) cues to localize sounds. These cues are not as effective as ITDs and ILDs are in the horizontal plane (Figure 3.3).

Going back to the comparison with our visual sense, our eyes perceive fluid motion in an animation running at as few as 20 frames per second. This means they cannot distinguish events separated by 50 milliseconds (ms). Sound travels at about 1,115 ft/s (340 m/s), so it never takes more than about 1 ms to cover the extra distance from one ear to the other. How, then, can such tiny differences allow us to localize sounds so precisely?

3.3 Binaural hearing
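The sketch below puts numbers on the comparison with vision. It uses a simple path-difference model: a distant source at azimuth θ reaches the far ear later by d·sin(θ)/c. The model ignores sound bending around the head, so real ITDs differ somewhat. The ear spacing of 0.2 m is a hypothetical round figure, not a value from the text.

```python
import math

SPEED_OF_SOUND = 340.0  # m/s, as given in the text
EAR_SPACING = 0.2       # m, hypothetical distance between the ears


def itd_seconds(azimuth_deg: float, d: float = EAR_SPACING, c: float = SPEED_OF_SOUND) -> float:
    """Interaural time difference for a distant source.

    azimuth_deg: 0 = straight ahead, 90 = directly to one side.
    Plain path-difference model: ignores diffraction around the head.
    """
    return d * math.sin(math.radians(azimuth_deg)) / c


if __name__ == "__main__":
    print(f"ITD, source at 90 deg: {itd_seconds(90) * 1e6:.0f} us")
    print(f"ITD, source 1 deg off-center: {itd_seconds(1) * 1e6:.1f} us")
    print(f"one frame at 20 fps: {1 / 20 * 1e6:.0f} us")
```

Under these assumptions the largest ITD is about 0.59 ms, below the 1 ms bound. A shift of a single degree near the front changes the arrival time by only about 10 µs, roughly 5,000 times shorter than the 50 ms the eye can resolve.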

3.3.2 Precedence effect

Imagine sound being played back through two loudspeakers located at exactly 45 degrees to your left and right in front of you. Both ears receive exactly the same signal, because it arrives at the same time and at the same level. Your brain then concludes that there is a single loudspeaker located directly in front of you. Suppose we introduced a delay of 40 ms in the left loudspeaker. We would still not hear two separate signals, because the separation between them is not large enough. However, our brain would identify the right side as the origin of the sound and the left side as a reflection, and would therefore localize the sound on the right. Furthermore, even if we raised the level of the left loudspeaker by up to 10 dB, our brain would still place the origin of the signal on the right. Helmut Haas performed these experiments and concluded that ITDs are more decisive for sound localization than ILDs. The phenomenon is known as the precedence effect (or Haas effect).

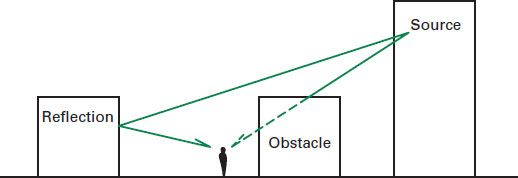

In real life we normally receive the direct sound before any reflection, and in most scenarios the direct sound also arrives at a higher level than the reflections. This is because reflections travel farther, losing some energy along the way, and each surface they strike absorbs part of their energy. There are rare cases, though, where the most direct path the sound could take is blocked or shielded by an intervening structure. In that case, a secondary path that reflects off another structure may deliver the first and dominant sound we hear. We then assume that this reflection dictates the direction of the source. This is referred to as displacement. It requires the reflection to have a significantly higher pressure level than the attenuated direct sound (Figure 3.4).

3.4 Displacement of the localization of the source

The sound will be perceived as arriving from the opposite direction, since the direct sound path is blocked.

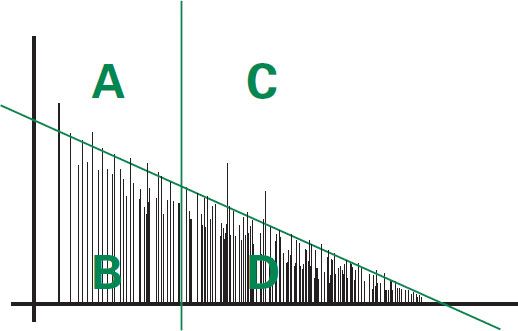

Figure 3.5 (page 42) illustrates the following:

| Zone | Description |
|---|---|
| A | Reflections that arrive within 50 ms of the direct sound and are significantly louder than the normal decay can displace the perceived location of the source. |
| B | Reflections that arrive within 50 ms of the direct sound and are at or below the normal decay amplify the direct sound and reinforce the signal. |
| C | Reflections that arrive more than 50 ms after the direct sound and are significantly louder than the normal decay are heard distinctly as echoes. |
| D | Reflections that arrive more than 50 ms after the direct sound and are at or below the normal decay form the reverberation. |

3.5 Reflectogram

Based on Figure 3.5, an echo (i.e., a distinct repetition of the signal) is a late reflection that has not lost much energy. For example, it may have reflected only once, off a highly reflective wall far from the source. This is why the back walls of venues are usually absorptive.
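The four zones of Figure 3.5 can be written as two tests: is the reflection early or late, and is it louder than the normal decay or not. The sketch below encodes them, converting extra path length into arrival delay at 340 m/s. The text only says "significantly louder" without giving a number. The 6 dB margin used here is a hypothetical placeholder, and the names `Reflection`, `delay_s`, and `classify` are illustrative.

```python
from dataclasses import dataclass

SPEED_OF_SOUND = 340.0  # m/s
EARLY_LIMIT_S = 0.050   # 50 ms boundary from Figure 3.5
LOUD_MARGIN_DB = 6.0    # hypothetical: dB above normal decay that counts as "significantly louder"


@dataclass
class Reflection:
    extra_path_m: float  # path length beyond that of the direct sound, in metres
    excess_db: float     # level relative to the normal decay (positive = louder)


def delay_s(r: Reflection, c: float = SPEED_OF_SOUND) -> float:
    """Arrival time of the reflection after the direct sound, in seconds."""
    return r.extra_path_m / c


def classify(r: Reflection) -> str:
    """Assign a reflection to zone A, B, C, or D of the reflectogram."""
    early = delay_s(r) < EARLY_LIMIT_S
    loud = r.excess_db > LOUD_MARGIN_DB
    if early:
        return "A: possible displacement" if loud else "B: reinforcement"
    return "C: echo" if loud else "D: reverberation"


if __name__ == "__main__":
    for r in (Reflection(5.0, 0.0), Reflection(5.0, 10.0),
              Reflection(30.0, 10.0), Reflection(30.0, -3.0)):
        print(f"{r.extra_path_m:>5} m, {r.excess_db:+} dB -> "
              f"{delay_s(r) * 1000:.1f} ms, {classify(r)}")
```

At 340 m/s, the 50 ms boundary corresponds to about 17 m of extra path. A strong single reflection from a hard back wall far from the stage can easily exceed that, which is why such walls are treated with absorption.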

3.3.3 Cocktail party effect

Another effect enhanced by our binaural hearing is the cocktail party effect: our ability to pick out one signal among many others. At a cocktail party, for example, you can carry on a conversation even though many others, some of them louder, are happening around you. You can also choose to listen to a different conversation in the room and find that you can do it. A related phenomenon describes how your attention is “pulled” toward an unintended signal when something of particular interest to you is mentioned (e.g., your name). Similarly, a non-native speaker's attention is drawn to a conversation in his or her native language happening elsewhere in the room.

IN THE ENVIRONMENTS WE INHABIT, there is always more than one sound arriving at our ears. We usually divide these sounds into signal, the sounds we are interested in, and noise, the sounds we do not want. Noise is simply unwanted sound. But whether we want it or not, our brain still processes all the sound that reaches our ears, and all of it affects what we finally hear.

A sound can mask other sounds, especially sounds that are quieter than it or higher in frequency. Sound masking can be a problem or a benefit, depending on how and where it happens.

Suppose you are on the phone and the air conditioning kicks in with a loud rumble. You will probably experience unwanted masking: you have to make a bigger effort than before to hear the person on the other end of the line. Now imagine that you are sitting in a cubicle while the person in the next cubicle is on the phone. When the air conditioning kicks in, it drowns out their conversation and makes it easier for you to concentrate on your work. This is desired masking.

We strongly believe that background noise is generally undesirable. There are, however, very specific cases where privacy is a priority and isolating people or groups of people from each other is not possible. In these cases, a sound masking system is the solution for creating privacy. Such systems are very common in office environments, and they have also been implemented in hospital waiting rooms and other settings requiring privacy.

Further reading

Blauert, J. (1997) Spatial hearing: The psychophysics of human sound localization. Cambridge, MA: MIT Press.

Moray, N. (1959) Attention in dichotic listening: Affective cues and the influence of instructions. Quarterly Journal of Experimental Psychology 11(1): 56–60. doi:10.1080/17470215908416289.