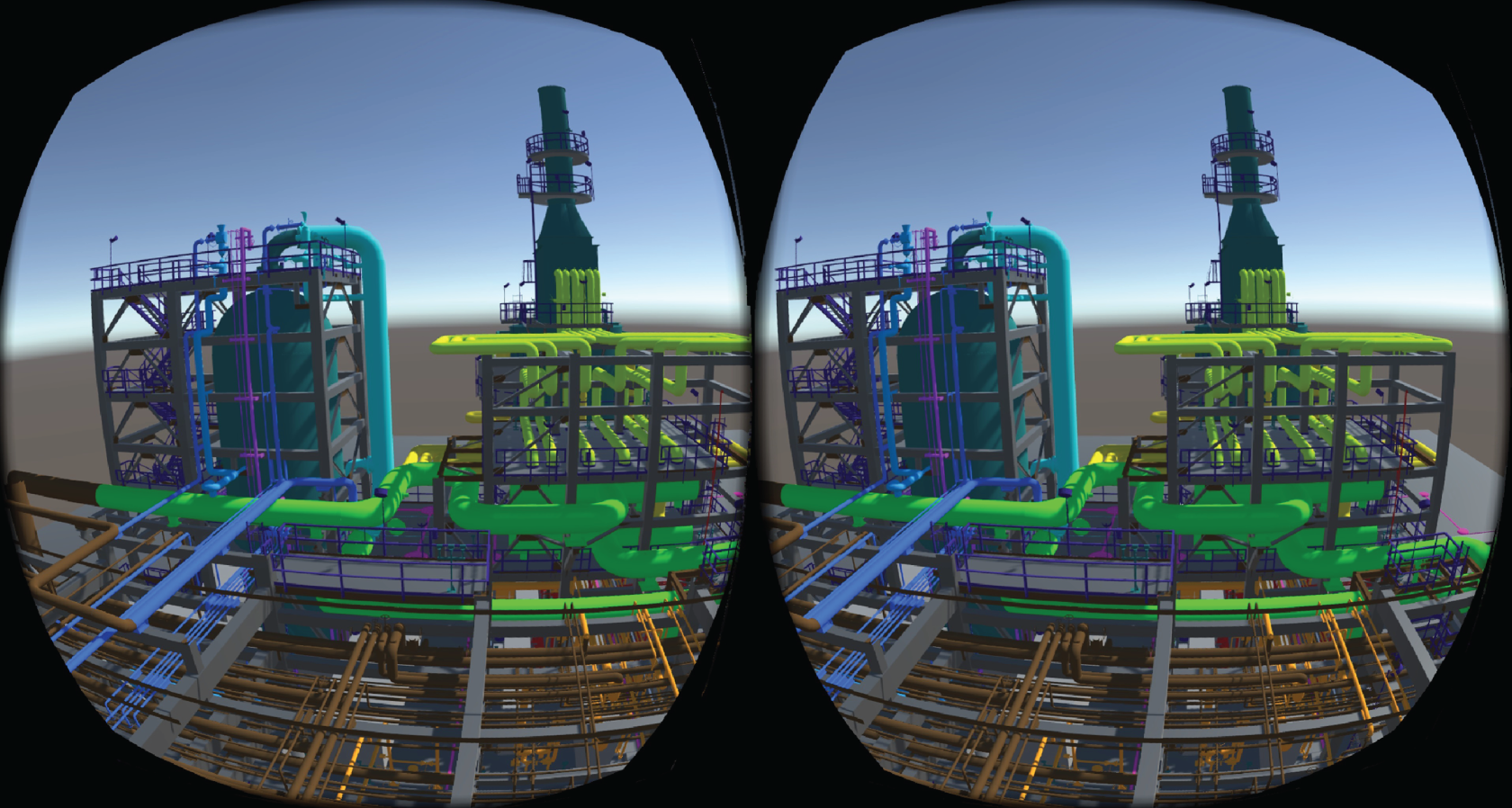

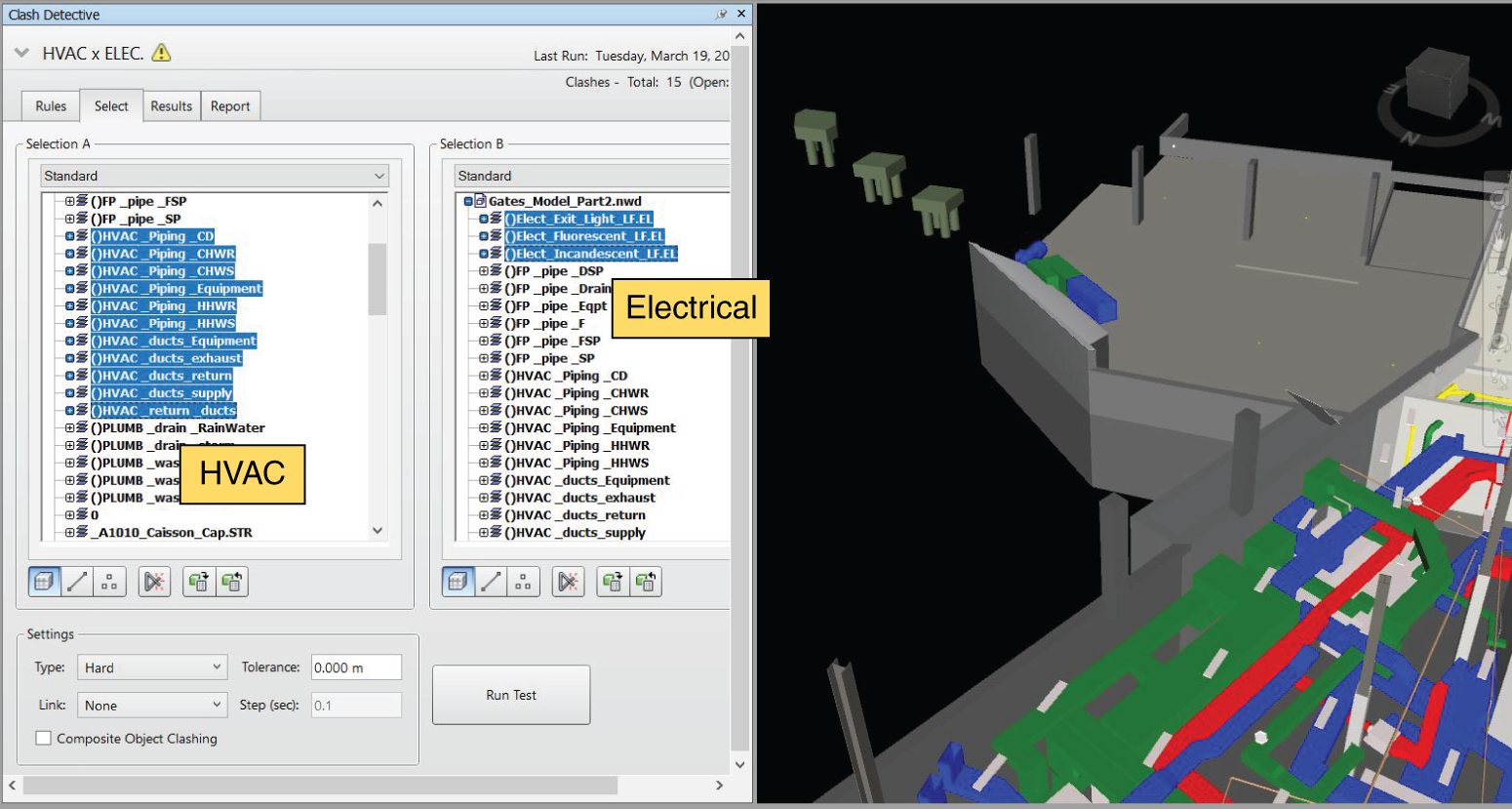

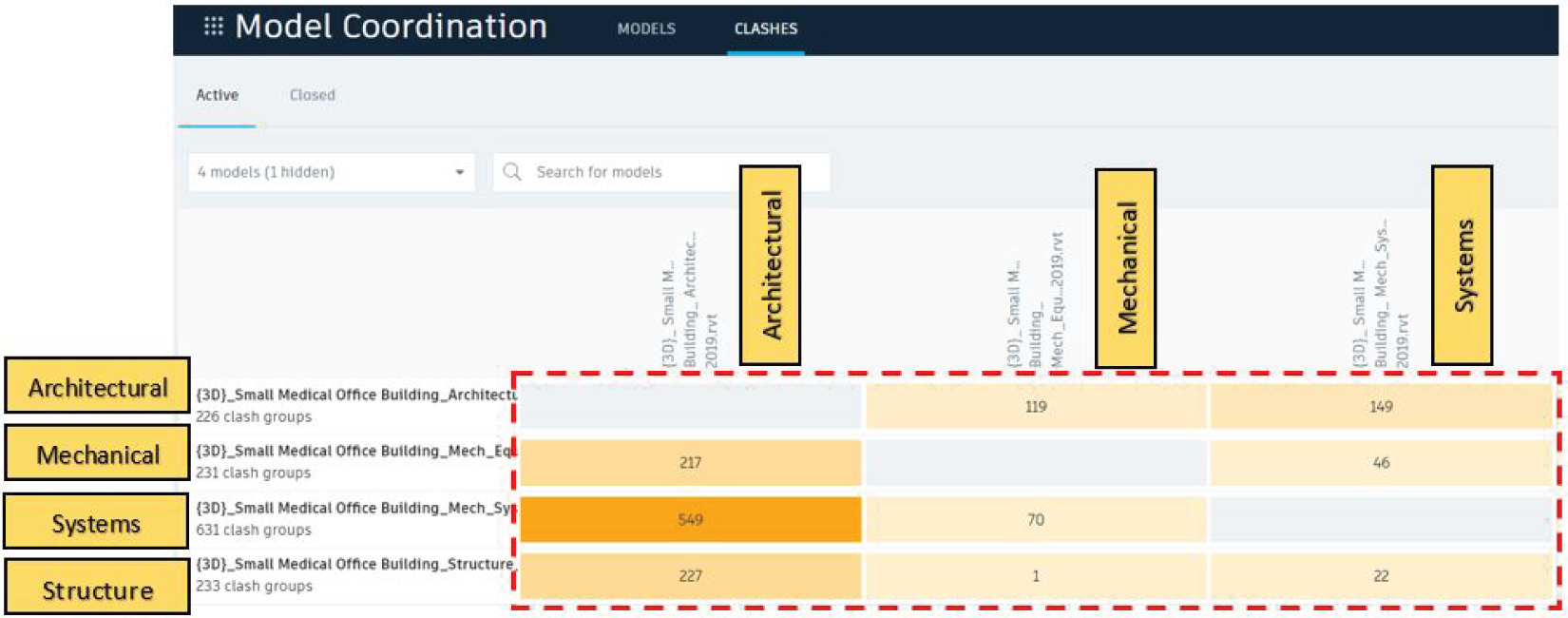

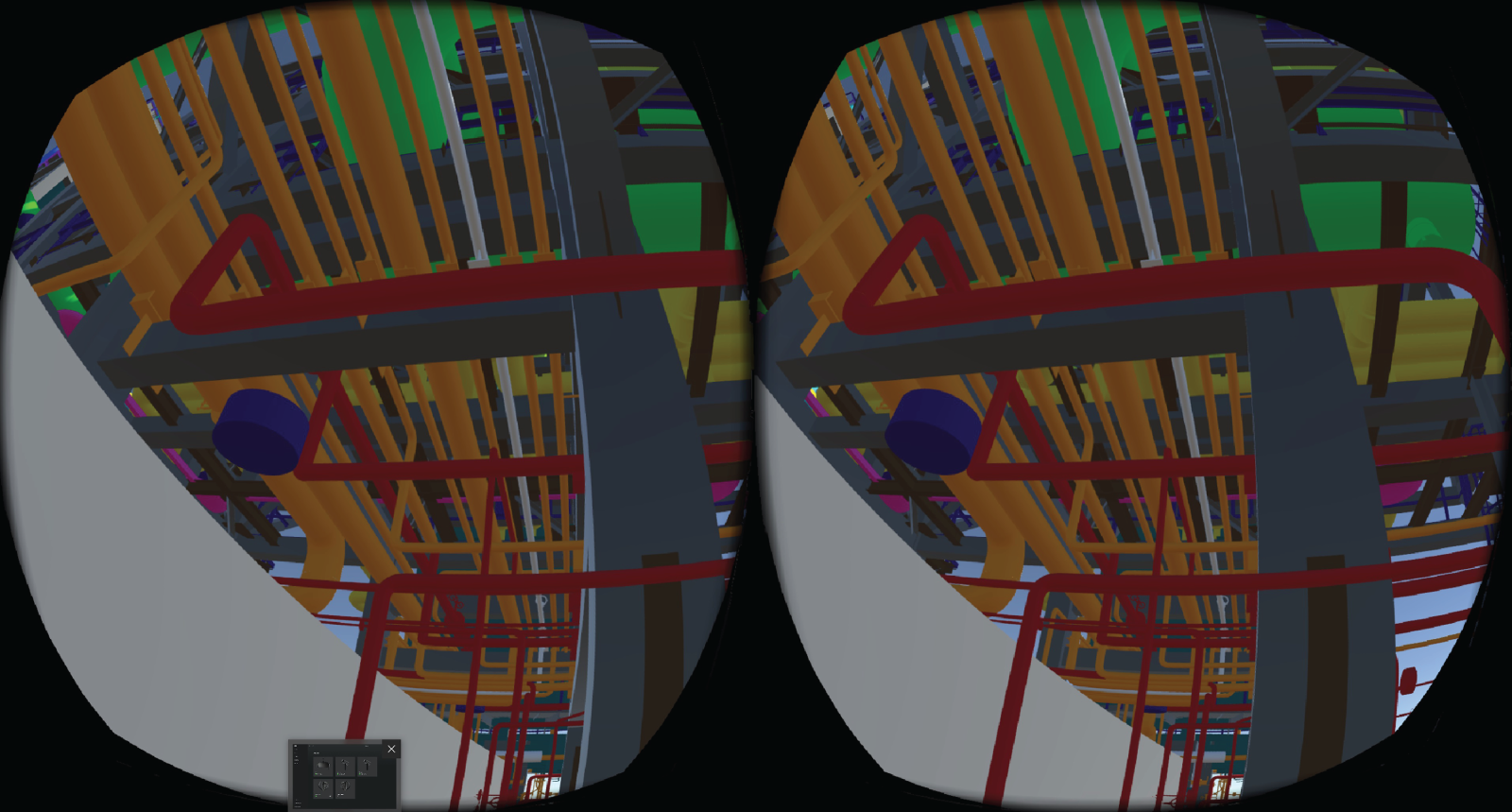

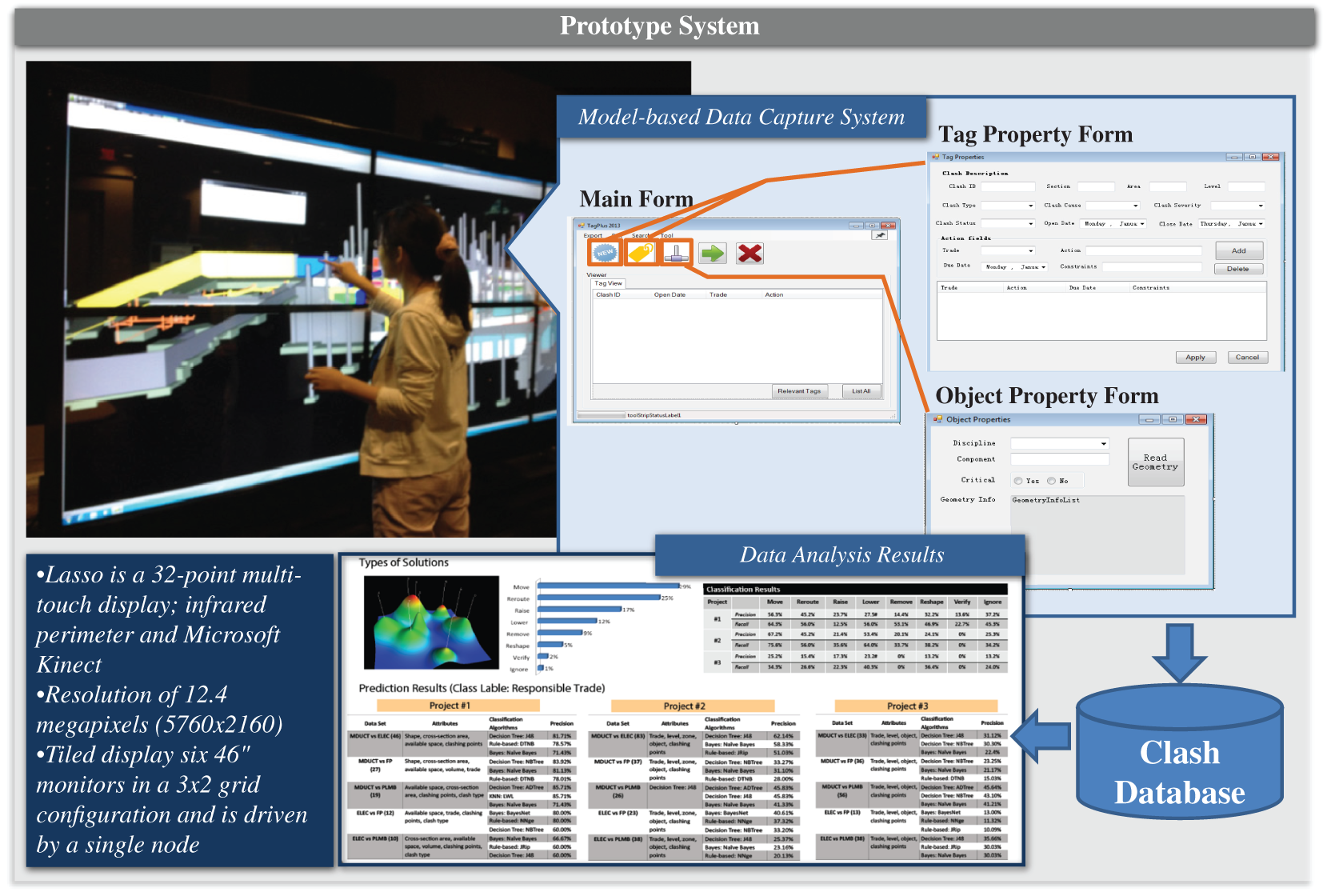

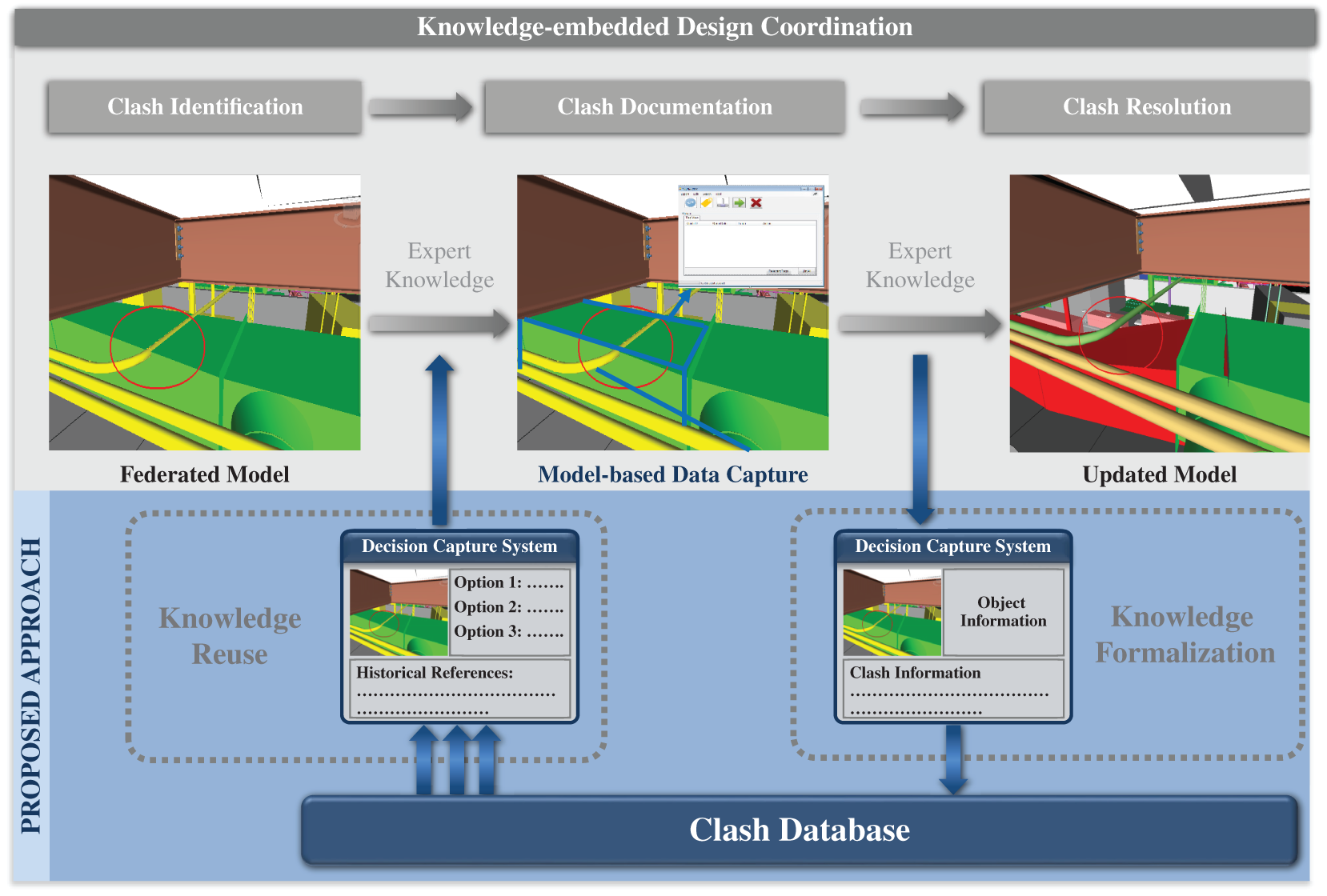

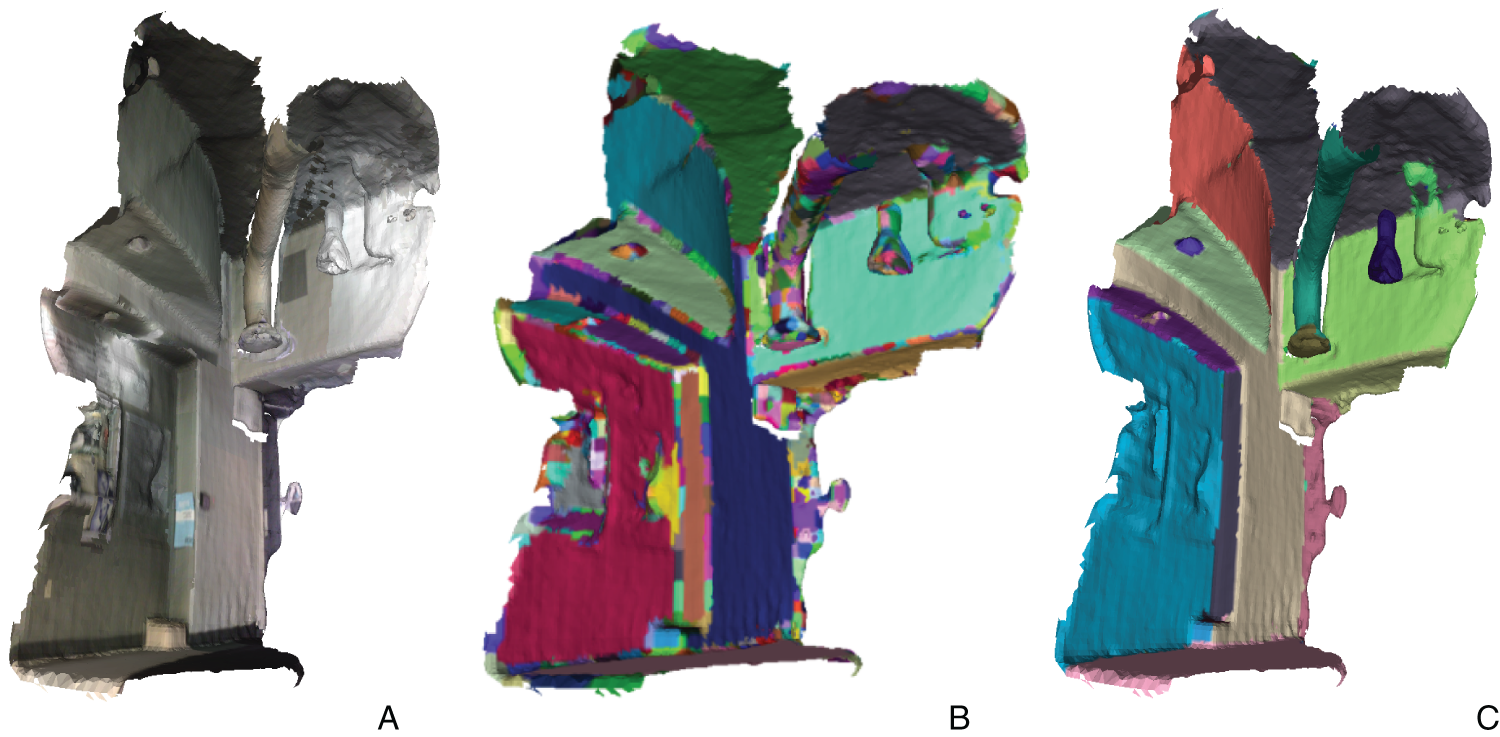

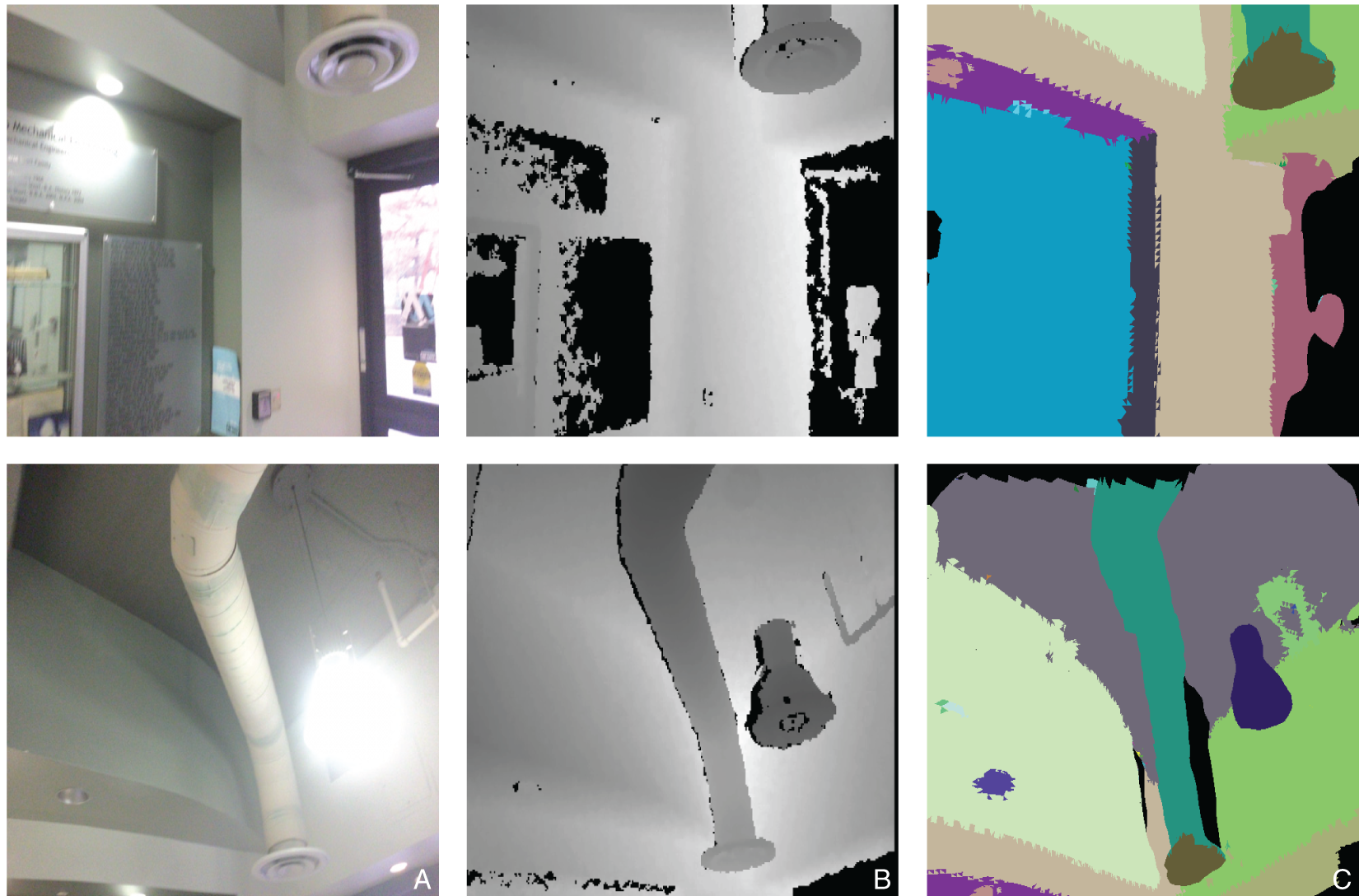

With advancements in software and hardware technology, our current building information modeling (BIM)-based design coordination processes will likely change drastically in the next decade. Rather than developing approaches to federate data from multiple disciplines, group clashes, or sequence the evaluation of clashes, one can envision an approach, not too far-fetched, in which artificial intelligence (AI) is leveraged and much of the data preparation and analysis that we plan for today is no longer needed. This chapter discusses a vision for the future of virtual design and construction as a whole. I cannot predict what the future holds, especially since technology and its adoption evolve quite rapidly. I can, however, reflect on what I have been observing and how that has shaped my research agenda. Parts of this chapter (Sections 10.2.2 and 10.2.3) are based on Leite (2018), published by Springer and reproduced in this book with copyright clearance.

In the preceding chapters of this book, I covered guidelines for setting up a project for successful BIM-based design coordination, the importance of considering model quality and level of development (LOD), how to carry out a successful design coordination session, and specific guidelines for the key stakeholders typically involved in design coordination. But with so many new technologies being implemented or piloted in the construction industry, the playing field could change drastically within the next decade. Much of what we see now in BIM-based design coordination is in many ways a replication of 2D design coordination on a light table, where two trades at a time would coordinate their scopes of work. Design coordination software replicates this by having users carry out pair-wise tests on a federated model: for example, a clash test between the heating, ventilation, and air conditioning (HVAC) and electrical trades, as shown in Figure 10.1.
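To make the pair-wise idea concrete, the sketch below tests every element of one trade against every element of another. It is a minimal illustration under stated assumptions, not how Navisworks works internally: each element is assumed to be reduced to an axis-aligned bounding box in metres, and the `Element` class, the function names, and the sample duct and cable-tray coordinates are all hypothetical.

```python
from dataclasses import dataclass
from itertools import product

Point = tuple[float, float, float]


@dataclass(frozen=True)
class Element:
    """Hypothetical model element reduced to its axis-aligned bounding box (AABB), in metres."""
    element_id: str
    trade: str
    min_pt: Point
    max_pt: Point


def boxes_clash(a: Element, b: Element, clearance: float = 0.0) -> bool:
    """True if the boxes intersect, or come within `clearance` of each other along every axis.

    clearance == 0 gives a hard clash (boxes that merely touch do not count);
    a positive clearance gives a clearance ("soft") clash.
    """
    return all(
        a.min_pt[i] - clearance < b.max_pt[i] and b.min_pt[i] - clearance < a.max_pt[i]
        for i in range(3)
    )


def pairwise_clash_test(elements, trade_a, trade_b, clearance=0.0):
    """One pair-wise test: every element of trade_a against every element of trade_b."""
    group_a = [e for e in elements if e.trade == trade_a]
    group_b = [e for e in elements if e.trade == trade_b]
    return [
        (a.element_id, b.element_id)
        for a, b in product(group_a, group_b)
        if boxes_clash(a, b, clearance)
    ]


# Hypothetical federated model: one duct and two cable trays crossing it.
model = [
    Element("duct-01", "HVAC", (0.0, 0.0, 3.0), (10.0, 0.6, 3.5)),
    Element("tray-07", "Electrical", (4.0, 0.2, 3.2), (4.3, 5.0, 3.4)),  # passes through the duct
    Element("tray-08", "Electrical", (4.0, 0.2, 3.6), (4.3, 5.0, 3.8)),  # runs 0.1 m above the duct
]

print(pairwise_clash_test(model, "HVAC", "Electrical"))        # [('duct-01', 'tray-07')]
print(pairwise_clash_test(model, "HVAC", "Electrical", 0.15))  # [('duct-01', 'tray-07'), ('duct-01', 'tray-08')]
```

Covering every pair of trades this way takes n(n-1)/2 separate tests for n trades, which mirrors the two-at-a-time light-table pattern. Bounding boxes also overstate clashes for diagonal or curved runs, so production tools test the actual geometry.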
In the near future, the time and effort required for clash detection will decrease with the increased use of cloud-based collaboration platforms such as Autodesk BIM 360®, which allow users to connect project teams and data in real time, from design through construction. Stakeholders will therefore no longer need to wait for a weekly design coordination meeting to check whether their systems clash with others. They can check in real time, while they and other stakeholders are modeling, within a collaborative work environment. Figure 10.2 illustrates clash tests between all trades in such an environment. Cloud-based collaboration platforms will enable more efficient collaboration and can further enhance design coordination when coupled with automated or semi-automated routing systems, much as we have auto-complete and smart composition for our text messages and e-mails. Imagine working in a collaborative environment in which we not only see in real time what other trades are working on and designing, but also have systems in place that can automate routing and auto-correct clashes, leveraging recent advancements in generative design. Some of my research is motivated by this vision and is discussed in this chapter.

FIGURE 10.1 Example pair-wise clash test set up in Autodesk Navisworks Manage. Source: Autodesk screen shots reprinted courtesy of Autodesk, Inc.

FIGURE 10.2 Example of a design coordination collaborative environment in Autodesk BIM 360®. Source: Autodesk screen shots reprinted courtesy of Autodesk, Inc.

For three years (2015–2018), I co-chaired the Horizon-360 committee, which was part of an organization called Fully Integrated & Automated Technologies (Fiatech). Fiatech is now part of the Construction Industry Institute (CII), and Horizon-360 continues as a committee within CII. The original committee was composed of about a dozen individuals, all technology enthusiasts from both industry and academia.
Horizon-360’s charge was, simply put, to scan other industries for technologies that might impact the architecture, engineering, construction, and facility management (AECFM) industry over the next three decades. Out of this work, a list of emerging technologies for the AECFM industry was derived. Our scan included technologies with incremental impacts as well as those with the potential for breakthrough industry advancement. So far, the Horizon-360 team has identified 23 technologies available today that could impact the AECFM industry, ranging from exoskeletons to autonomous vehicles to virtual reality (VR), augmented reality (AR), and mixed reality (MR). Several of these technologies have the potential to change how we deliver projects as a whole, including how we manage the fragmented nature of our industry and how we deal with the increasing shortage of high-quality craft labor by augmenting workers’ capabilities through technology. The technologies identified by the Horizon-360 team that could more directly impact design coordination include the following:

- Virtual, augmented, and mixed reality
- Artificial intelligence in support of automated design coordination
- Computer vision and deep learning in support of automated model updates

Each of these is discussed in more detail in the following subsections.

BIM, or 3D modeling of facilities, has successfully mitigated many longstanding issues in the construction industry, such as design coordination between multiple complex trades, process optimization to minimize rework, and enhanced construction safety through better visualization of activities. Virtual prototyping, achieved by using an n-dimensional digital model to visualize the project design and construction processes, is a key contributor to these improvements. The successful implementation and beneficial results of BIM indicate that visualization is an appropriate solution for these challenges; however, gaps remain between BIM-based virtual prototyping and real-world prototyping.
Undetected issues in BIM, such as design errors that do not lead to physical clashes, still challenge construction professionals. Enhanced visualization technologies can help mitigate such issues. In recent years, the construction industry has shown great interest in VR, AR, and MR. The idea behind VR/AR/MR is to provide users with an immersive experience in a computer-generated environment. VR completely blocks out the real world, providing an immersive experience in the virtual world only. AR overlays the virtual world onto the real world based on specific software settings, such as location information. MR overlays the virtual world onto the real world based on the technology’s understanding of the real world. In other words, MR is an enhanced AR in which virtual objects are integrated into, and respond to, the real world. These experiences are enabled by head-mounted displays (HMDs) and multisensory input and output devices. AR and MR can also be implemented on tablet computers and even smartphones, and new HMDs that support VR, AR, and MR use cases are also coming to market. Although specific use cases vary, these technologies can give project stakeholders a much more immersive, interactive, and potentially even collaborative prototyping environment, as illustrated in Figures 10.3–10.5.

My research team at the University of Texas at Austin has been working with CII on a study to identify use cases that the heavy industrial sector can leverage with measurable positive impacts on projects. Early results of user tests for a design review use case are promising. Compared with a desktop-based VR environment, an immersive VR environment (using an HMD) produced noticeable improvements, for both our novice and expert groups, in the number of design errors detected and in recall of those errors a week after the tests were conducted.
We hope that technologies such as VR/AR/MR can withstand the test of time if we focus our research efforts on identifying the use cases in which these technologies actually help engineering and construction professionals improve their work performance.

With the wide adoption of mobile computing in the AECFM industry, we have entered an era in which information and data are ubiquitously generated and distributed; consequently, project organizations face data generated at high velocity, in large volumes, and in a great variety of formats. With the increasing amount of information and data generated over the life cycle of capital projects, information modeling has become a critical element in designing, engineering, constructing, and maintaining capital facilities (Leite et al. 2016).

FIGURE 10.3 Virtual reality view of an industrial plant using a head-mounted display

FIGURE 10.4 Design review session in virtual reality: missing support for a pipe spool

FIGURE 10.5 Workface planner (left) discusses design errors with a designer (right): missing fall-protection railing

At the same time, we have been witnessing a significant shortage of high-quality craft labor in the construction industry. Karimi et al. (2018) investigated the impact of this shortage and concluded that projects that experienced craft shortages underwent higher cost growth than projects that did not. This issue will continue to plague our industry if we do not rethink how we deliver projects. One approach is to use AI to train algorithms on the experiential knowledge of veteran workers and then leverage this knowledge to train novices, augmenting workers who have less field experience. This was the approach we adopted in one of my past research projects at the University of Texas at Austin.
Specifically, we have been investigating how to capture tacit experiential knowledge in design coordination to train novices in carrying out this process. Decisions made and approaches taken in mechanical, electrical, and plumbing (MEP) design coordination largely depend on the knowledge and expertise of professionals from multiple disciplines. The MEP design coordinator, who usually represents the general contractor or the main mechanical contractor, coordinates the effort of collecting and identifying clashes between systems. Coordinators ask clarifying questions during coordination meetings and often propose solutions. During the process, the coordinator’s tacit, experiential knowledge is frequently called upon and transferred to less experienced members of the team. Until recently, the design coordinator was usually an experienced engineer who knew how to differentiate between critical and noncritical clashes, prioritize clashes by importance, and provide suggestions to the team, or even make decisions, based on their expertise and experience. Increasingly, however, because the recession depleted the ranks of veteran engineers in the United States industry, and because of the rising use of BIM, general contractors have started to rely more and more on novice engineers to run conflict resolution sessions. Although young engineers may be proficient in operating coordination software, many have limited practical experience in MEP design and coordination. While the use of BIM in MEP design coordination has greatly increased the amount and quality of available data, significant experiential knowledge is still needed for efficient, high-quality decision-making, yet the process for bringing that knowledge to the table is faltering. We have conducted a study comparing the behaviors of experienced MEP coordinators with those of novices during model-based design coordination.
Results show that experienced coordinators can locate relevant information and identify external information sources more efficiently than novice coordinators. Experienced coordinators are also able to perform more in-depth analysis within the model (Wang and Leite 2014a, 2014b). My team has been investigating whether novices’ performance on coordination tasks improves when experiential knowledge extracted from past projects is made available to them through a software-enabled decision support system. Results show that such decision support significantly reduces the time spent on design coordination tasks and increases the accuracy of clash resolutions.

With this vision of capturing experiential knowledge to train novice designers in mind, we have developed an approach to capture, represent, and formalize experiential knowledge in design coordination in order to inform better design decisions, improve collaboration efficiency, and train novice designers and engineers (Wang and Leite 2016). The approach systematically captures expert decisions during design coordination in an object-oriented, computer-interpretable manner and leverages database and machine learning techniques for knowledge reuse. We developed a prototype system called TagPlus (Figure 10.6) that works as a plug-in for a widely used design coordination software system, Autodesk Navisworks®. It captures design coordination decisions and stores each instance directly with the related 3D objects. We then store this information in a database of MEP clashes and related expert solution descriptions and use it to train algorithms to learn from that knowledge (Figure 10.7), ultimately providing novice designers with a problem-based learning platform to enhance their performance in design coordination tasks. TagPlus is described in detail in Wang and Leite (2015). Tests with novices showed great potential for the knowledge-embedded approach.
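The capture-and-reuse loop described above can be illustrated with a small sketch. This is not TagPlus: the record fields, the SQLite table, and the k-nearest-neighbour vote are hypothetical stand-ins for whatever schema and machine learning techniques the actual system uses. The sketch stores each expert decision as a structured record, then suggests a resolution for a new clash from the most similar past decisions.

```python
import sqlite3
from collections import Counter
from dataclasses import astuple, dataclass


@dataclass
class ClashDecision:
    """One captured expert decision (hypothetical schema, for illustration only)."""
    clash_id: str
    trade_a: str       # e.g. "HVAC"
    trade_b: str       # e.g. "Plumbing"
    element_a: str     # category of the first clashing element, e.g. "duct"
    element_b: str     # category of the second clashing element, e.g. "pipe"
    overlap_m: float   # penetration depth, in metres
    is_critical: bool  # expert's critical / noncritical judgement (stored, not used for matching)
    resolution: str    # expert's resolution text


def store(conn, decisions):
    """Persist decisions as rows of a computer-interpretable table."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS decisions (
               clash_id TEXT PRIMARY KEY, trade_a TEXT, trade_b TEXT, element_a TEXT,
               element_b TEXT, overlap_m REAL, is_critical INTEGER, resolution TEXT)"""
    )
    conn.executemany(
        "INSERT INTO decisions VALUES (?, ?, ?, ?, ?, ?, ?, ?)",
        [astuple(d) for d in decisions],
    )
    conn.commit()


def distance(row, query):
    """Each mismatched categorical field adds 1; overlap depth adds its absolute difference."""
    categorical = sum(row[i] != query[i] for i in range(4))
    return categorical + abs(row[4] - query[4])


def suggest(conn, trade_a, trade_b, element_a, element_b, overlap_m, k=3):
    """Majority-vote resolution among the k most similar stored decisions (k-nearest neighbours)."""
    rows = conn.execute(
        "SELECT trade_a, trade_b, element_a, element_b, overlap_m, resolution FROM decisions"
    ).fetchall()
    query = (trade_a, trade_b, element_a, element_b, overlap_m)
    nearest = sorted(rows, key=lambda r: distance(r, query))[:k]
    return Counter(r[5] for r in nearest).most_common(1)[0][0]


conn = sqlite3.connect(":memory:")
store(conn, [
    ClashDecision("c1", "HVAC", "Plumbing", "duct", "pipe", 0.05, True, "reroute pipe below duct"),
    ClashDecision("c2", "HVAC", "Plumbing", "duct", "pipe", 0.08, True, "reroute pipe below duct"),
    ClashDecision("c3", "HVAC", "Electrical", "duct", "conduit", 0.02, False, "adjust conduit in field"),
    ClashDecision("c4", "HVAC", "Plumbing", "duct", "hanger", 0.01, False, "ignore: hanger clearance"),
])
print(suggest(conn, "HVAC", "Plumbing", "duct", "pipe", 0.06))  # reroute pipe below duct
```

A majority vote over a handful of neighbours is about the simplest learner that fits this loop; as stored decisions accumulate, it could be replaced by a trained classifier without changing how decisions are captured.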
However, additional data are needed for a more in-depth analysis. Based on feedback from participants and direct observations in our experimental studies with novices, the information provided by the decision support system helped them understand the clashes more efficiently and effectively. Example responses include: “It made it easier to understand the clashes,” “I feel design intent and constraints are apt parameters in bringing in spatial and temporal context,” “The suggestions were clear and usually correct and helped in making a decision,” and “It saves time in providing all the information about the clash clearly.” The decision support system also helped participants adopt a more organized structure for documenting clashes and solutions, and it encouraged wider consideration of multiple factors (such as design intent and constraints) during decision-making.

FIGURE 10.6 TagPlus prototype system to capture expert experiential knowledge in BIM-based MEP design coordination

In summary, our current design coordination research results show that the average time spent per clash is significantly reduced when decision support is available; however, the accuracy of the predicted results still needs to be improved. These results illustrate how decision support can affect novices’ performance and shed light on where future improvements in knowledge-embedded decision support systems should focus. This research is just the start of what could lead to automated or semi-automated design coordination.

Infrastructure and buildings are designed to have long useful lives, on the order of decades. Many buildings around the world are still in operation after centuries, having undergone numerous renovations.
This long operational phase represents the majority of a building’s life cycle, yet information related to operations and maintenance (O&M) and renovations is rarely kept up to date, even though facilities are dynamic in nature and as-built conditions change frequently. This is a major challenge for design coordination in retrofit projects. A lack of up-to-date as-built information affects decisions made during O&M, increasing the cost of searching for, validating, and/or re-creating facility information that should already have been available (Fallon and Palmer 2007). Gallaher et al. (2004) estimated that O&M personnel in the United States capital facilities industry spend $4.8 billion annually verifying that documentation accurately represents as-is conditions, and another $613 million converting that information into a usable format. A database and a data model are the best practice for preserving such information over a structure’s life cycle, and the rapidly adopted building information models could potentially have been the ideal solution. Unfortunately, building information models are currently used mostly for project management purposes during the construction phase.

FIGURE 10.7 Knowledge formalization and reuse in design coordination

The main reason building information models have not been widely adopted for O&M is the enormous undertaking of updating project models for every single change that occurs. Manually updating the models over a structure’s long life cycle is cumbersome and extremely error-prone. As a result, human operators and contractors are often unwilling to keep, or negligent in keeping, building information models up to date. Even when participants are willing, it is difficult to determine whether the updates are correct or whether any information is missing. Automating the process computationally therefore seems essential if such information is to be useful, timely, and accurate.
My team at the University of Texas at Austin, along with colleagues at Drexel University (James Lo and Ko Nishino), is working on a National Science Foundation project called LivingBIM. This research aims to demonstrate that automatic and continuous updates of a structure’s BIM are possible and how such updates can benefit facilities and structures over their long life cycles. To help automate this process, computer vision is used to sense the environment and provide decision-making inputs for updating the BIM database. Computer vision in an open world faces an extremely challenging problem: identifying a detected object. In a built environment, however, the variety of expected objects is arguably more limited; better yet, the BIM database itself can serve as a resource that provides contextual identification for a detected object. With the complexity of object identification reduced, the iterative process of updating BIM through machine vision over a long period can continuously train the machine vision process itself, improving detection quality and reducing false positives and other errors. Our team has designed a new building point cloud segmentation method and has begun creating and curating a training dataset in order to eventually apply deep learning methods to the semantic segmentation of building system scans (Figures 10.8 and 10.9).

We have begun to explore what content computer vision could provide. We chose deep learning as the avenue for data processing because emerging semantic segmentation methods have demonstrated unprecedented versatility. Rather than hand-engineering an algorithm to identify a single type of object in a few types of scenarios, deep learning holds the promise of identifying many different types of objects in a similarly diverse range of scenarios.
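Because the team’s point cloud segmentation method is DBSCAN-based (Czerniawski et al. 2018), a small sketch helps show why clustering in more than three dimensions matters for building scans. Here the six dimensions are assumed to be a point’s position plus its unit surface normal scaled by a weight; that choice, the `normal_weight`, `eps`, and `min_pts` values, and the function names are illustrative assumptions, not the published formulation. The toy scene is a floor meeting a wall.

```python
import math


def six_d_features(points, normals, normal_weight=0.5):
    """ASSUMPTION: the 6D feature is position (x, y, z) plus the unit normal scaled by normal_weight."""
    feats = []
    for p, n in zip(points, normals):
        length = math.sqrt(sum(c * c for c in n))
        feats.append(tuple(p) + tuple(normal_weight * c / length for c in n))
    return feats


def dbscan(features, eps, min_pts):
    """Plain DBSCAN: one cluster label per feature vector, -1 for noise."""
    n = len(features)
    labels = [None] * n

    def neighbours(i):
        # Brute-force radius query (includes i itself); real scans need a spatial index.
        return [j for j in range(n) if math.dist(features[i], features[j]) <= eps]

    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisional noise; may later be claimed as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise reachable from a core point: border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = neighbours(j)
            if len(j_neighbours) >= min_pts:
                queue.extend(j_neighbours)  # j is a core point, so keep expanding
    return labels


# Toy scan: a 5 x 5 floor patch (normal +z) meeting a 5 x 5 wall patch (normal +x), plus one stray point.
floor = [(0.1 * i, 0.1 * j, 0.0) for i in range(1, 6) for j in range(5)]
wall = [(0.0, 0.1 * j, 0.1 * k) for j in range(5) for k in range(1, 6)]
points = floor + wall + [(5.0, 5.0, 5.0)]
normals = [(0, 0, 1)] * len(floor) + [(1, 0, 0)] * len(wall) + [(0, 1, 0)]

with_normals = dbscan(six_d_features(points, normals, normal_weight=0.5), eps=0.15, min_pts=3)
position_only = dbscan(six_d_features(points, normals, normal_weight=0.0), eps=0.15, min_pts=3)
print(sorted(set(with_normals)))   # [-1, 0, 1]: floor, wall, and noise kept apart
print(sorted(set(position_only)))  # [-1, 0]: floor and wall chain together at the corner
```

Using position alone, points along the corner where the floor meets the wall are close enough to chain the two surfaces into one cluster; adding the normals separates them, which is the kind of pre-segmentation that makes manual grouping into semantic classes faster.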
Unfortunately, no existing dataset can be used to train deep learning networks to classify or semantically segment building systems. Our team has been exploring different ways such a dataset could be created. Synthetic RGB-D (color + depth) images could be generated using 3D computer modeling and photorealistic rendering, or scans could be collected and then manually annotated. In the process of manually annotating scans, we developed a new 6D DBSCAN-based approach to segmenting point clouds, used as a preprocessing step before manually grouping clusters into semantically meaningful groups (Czerniawski et al. 2018).

FIGURE 10.8 3D reconstruction of part of a building facility created using a commodity range camera: (a) the original scan with captured color texture; (b) the scan pre-segmented using the 6D DBSCAN-based segmentation method; and (c) the scan semantically segmented

FIGURE 10.9 2D images of part of a building facility collected using a commodity range camera: (a) RGB color channels; (b) depth channel; (c) semantic segmentation of the 2D images

Once a sufficiently large dataset has been created, we will move on to training neural networks to semantically segment scans, and ultimately use those segmented scans to perform automated 3D modeling. Our initial annotated dataset of 3D reconstructions of building facilities, which we call 3DFacilities, is presented in detail in Czerniawski and Leite (2018). The dataset currently contains over 11,000 individual RGB-D frames comprising 50 annotated scene reconstructions. We hope that this dataset, leveraging the success of deep learning, will contribute to the scan-to-BIM research community.

Many new technologies and processes being implemented or piloted in the construction industry involve some form of digital information. We are now able to collect more data at lower cost than ever before. The problem is that we are drowning in data.
We need to better integrate new data and develop innovative computing approaches to reason about this sea of digital data. In other words, we need to take advantage of advances in computer science, such as AI, to automate processes and make better use of data analytics. And to take full advantage of big data analytics, we need to enable algorithms to analyze data across systems. Data interoperability is therefore one of the largest barriers to achieving the complete vision of digital transformation. All of this is happening in an industry that is known for being a slow adopter of new technology and that, according to McKinsey & Company (Agarwal et al. 2016), invests less than 1% of revenues in research and development, versus 3.5% and 4.5% in the auto and aerospace industries, respectively. As projects grow more complex, we need to face this challenge head-on and commit as an industry to investing in a digital future, including the data capture, modeling, and integration that will enable next-generation data analytics.

This chapter discussed a vision for the future of design coordination. Three technologies were discussed:

- Virtual, augmented, and mixed reality
- Artificial intelligence in support of automated design coordination
- Computer vision and deep learning in support of automated model updates

Together, these three technologies have the power to transform design coordination as a whole. VR/AR/MR is changing how we interact with the virtual world, helping stakeholders better understand their scope of work by immersing them in the modeling environment. The next step in the evolution of design coordination is to harness engineering knowledge to enable adaptive collaboration between humans and machines: in other words, having machines help humans size and route systems while ensuring that those systems are clash free, possibly using recent advancements in generative design. To achieve this, we need to ensure that the most accurate model information is available, all in an era in which our industry is undergoing a digital transformation that can catalyze the vision of automated design coordination.
As discussed, in the near future the time and effort required for clash detection will decrease with the increased use of cloud-based collaboration platforms, which will enable more efficient collaboration and can further enhance design coordination when coupled with automated or semi-automated routing systems, much as we have auto-complete and smart composition for our text messages and e-mails. Envision working in a collaborative environment in which we not only see in real time what other trades are working on and designing, but also have systems in place that can automate routing and auto-correct clashes. This would allow design coordination to be done much more efficiently and effectively, and to be integrated more seamlessly into the actual design process. We would then be doing less retroactive coordination and more truly collaborative design.

Chapter 10

What the Future Holds for Design Coordination

10.0 Executive Summary

10.1 Introduction

10.2 Emerging Technologies for Design Coordination

10.2.1 Virtual, Augmented, and Mixed Reality

10.2.2 Artificial Intelligence in Support of Automated Design Coordination

10.2.3 Computer Vision and Deep Learning in Support of Automated Model Updates

10.3 Digital Transformation of the AECFM Industry

10.4 Summary and Discussion Points

References